As Apple prepares to extend its apps platform into the intimate world of Spatial Computing with Apple Vision Pro and at the same time into what it’s calling a more socially-connected FaceTime experience, the company is facing two apparent competitive threats that also represent two opposite extremes of influence.

The first threat relates to Artificial Intelligence and the second to Apple’s App Store. They might not seem related, but as media narratives they seem to be.

Both come from the same kind of thinkers who not so long ago imagined Apple would be driven out of business because it wasn’t investing all of its resources into Voice First smart microphones, and then into folding displays that promised to turn a thick iPhone into a creased iPad. Why isn’t Apple chasing all the mistakes of others?

At the same time, these same thinkers were also insisting that world governments should all force the company to manufacture replaceable battery packs like Nokia did back before phones were waterproof, to license Adobe Flash on its mobiles, to adopt mini-USB, and to provide free tech support for any sort of counterfeit components users might install inside their devices. Shouldn’t the bureaucrats who can barely balance their own budgets take over Apple’s engineering?

These are all examples of tech media populism, which, just like the populist political extremes of the right and left, imagines that vast and incredibly expensive and complex infrastructures should be built to solve some problem that they imagine they will ultimately benefit from. But, this should be done at somebody else’s expense, because they really believe they need this but also really don’t like the idea of having to pay for it.

Why trust in capitalism and its exchange of supply and demand on a level playing field when you can imagine that magic solutions will happen, and this can all be ostensibly done at somebody else’s expense? In the tech industry, this kind of shallow-thought populism has regularly predicted the death of Apple for not voluntarily doing what they demand, while also predicting horrible consequences if Apple is not forced to do what they want by big governments.

It’s crazy that the people who are clearly always wrong somehow never get called to account on their errors and still get a soapbox to continue saying new things along the same facile brained lines. Nothing has surprised me more across the last couple decades of writing about Apple and consumer tech.

Apple’s failure to face strident competition in AI?

The first threat that’s become popular to fret about pertains to a collectively larger array of competitors all touting generative AI tools that Apple ostensibly lacks. All of the big tech companies, certainly including Microsoft, Meta, and Google, have jumped on the bandwagon of touting Large Language Models that can freshly generate anything from text to photorealistic images to computer code, sophisticatedly derived from the patterns of existing content.

This kind of AI promises to radically accelerate design and creation tasks of all sorts, automating various processes, and thereby slashing the costs of doing virtually anything. Apple has yet to badge its logo on an LLM the way almost everyone else already has.

If it’s hard to imagine that Apple lacks a suitable answer to a clearly world-changing new technology concept that promises to shake up the competitive landscape, there are easy parallels. Just think back to MP3-playing phones, third party mobile app stores, encrypted messaging, notifications, NFC and Tap to Pay, big screen phones, Voice First, tablets, waterproof hardware, smartwatches, music streaming, TV content subscriptions, dark mode photography, and of course, today’s focus on virtual reality.

Is Apple really inescapably behind in a race where it will never be able to catch up and perhaps “can’t afford to” as the analysts like to claim? Or is this just another poorly conceived narrative from rabble-rousers looking to invent a crisis to get ad clicks?

That sounds like one question I could define as simplistic populism and answer in a long, complicated history of facts. AI could probably do it for me.

Apple’s complete lack of competition in apps?

At the same time, Apple is also being portrayed as an evil and conniving monopolist exercising oppressive control over its App Stores for iPhones, iPads and of course, the upcoming Apple Vision Pro.

It feels difficult to rationalize how Apple could both be thoroughly and completely behind every other major software developer and basically “unable to innovate,” while at the same time having innovated itself into total domination over every major software developer and crafting a world where it effectively owns the very means of production. That’s because both these narratives are wrong.

Just like a lot of other problems that don’t exist, politicians are racing to offer “solutions” to Apple’s creation of the safest, most productive, and largest level playing field of a platform to ever exist in the history of computing. These ideas have little support among many of Apple’s actual developers outside of a few billionaires and their demands to make even more money by having Apple forced to subsidize their operations.

The only jurisdiction where they’ve really made progress is the EU, which has written a law, the Digital Markets Act, that specifically seeks to insulate Spotify from paying for its use of Apple’s platform.

It’s useful to note that Apple’s platform for the discovery, sales, delivery, and updating of software apps isn’t the world’s largest by sheer download traffic, and certainly isn’t the only option for users. Google runs its own Android app platform, along with the PRC and various independent stores curated by Android makers and other alternative operating systems, from forks of Android including Amazon Fire, to alternatives like LG’s webOS on my TV, Samsung’s Tizen on my refrigerator’s display, Tesla’s on my vehicle, or BMW’s on my last one.

The fact that virtually all of these app markets are terrible in comparison to Apple’s isn’t a problem for governments to solve. Instead, it’s evidence that Apple’s approach to building— at great expense— a functional marketplace that takes notice of the interests of both developers and customers was a great investment for the company and good for society in general.

Other makers should devote similar efforts to building great app markets!

Why aren’t other app stores better?

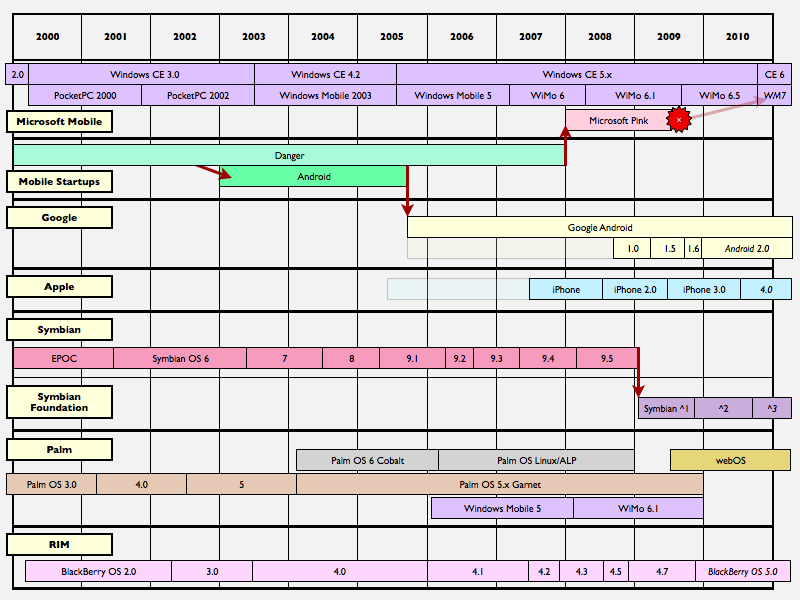

Microsoft created an app system for Windows patterned after the App Store, and also attempted to launch a mobile software store for its ill-fated Windows Phone platform. A major contributing factor to the demise of Windows Phone was that its app platform wasn’t very good. Microsoft struggled to attract enough developers to build and maintain sufficient apps for its mobile devices, highlighting how critically important it is for a device maker to devote enough attention to keep their app platform attractive, functional, diverse, and complete in its offerings.

A major reason that Android, Fire, Tizen, and other platforms have struggled in their efforts to unseat Apple and its App Store is that very same competition that Apple has exerted in building a better complete solution that attracts more buyers and encourages them to spend money on apps.

The tech media often likes to describe Apple’s success with the App Store using pejorative terms ranging from “Walled Garden” to “sticky ecosystem,” as if we are British children forced to enjoy roses where you can’t hear traffic, or alternatively flies stuck in a glue trap and dying in place because it’s so easy and convenient to buy apps from Apple that we have no other choices and are stuck there, miserable in a paradise we might die in.

What are their ugly phrases to describe the miserable experience of Windows and Android malware and spyware surveillance? There curiously aren’t any, just the ideas of freedom and openness. Isn’t the App Store the really free and open choice, due to Apple’s efforts to keep big developers from exploiting its users?

Erase all the slanted narratives and it becomes clear: Windows was corruptly totalitarian, Android is chaotic communalism, and Apple is delivering the most ideally centrist solution to personal computing. Apple has delivered free but regulated markets that cater to individual demand rather than power centers of administrative right or the idealistic left.

Apple has become the epitome of American strength through diversity, and the results are so desirable that everyone everywhere is trying to copy the company.

Listen to the music

Rather than Apple being punished for putting in the work to build something beneficial, its competitors should be punished for failing to invest similar efforts and resources into their own stores. Financially they are indeed missing out, thanks to the choosy, collective hands of individual customers making use of the App Store, without any involvement of government decree picking the winners or losers.

That makes the EU’s recent efforts to prop up their home field corporation Spotify as a “winner” in selling streams of songs that pay very little to artists a very big mistake by big government. By effectively pushing Apple to support Spotify’s distribution on its own platforms for free, the EU is punishing a successful investment by Apple’s App Store and instead coddling failing business decisions that have actively damaged the music industry while billing customers for an ephemeral service that will be worthless to them as soon as Spotify goes out of business.

Imagine if the United States had jumped in and propped up Napster’s file sharing business as a “solution” to the decline in CD sales two decades ago. That would have been popular among people who didn’t want to pay for music, but it would have been disastrous for music and musicians and everyone employed by the industry, and eventually everyone who wanted to enjoy the creation of new commercial music.

The real solution to the crisis hitting the music industry around the year 2000 was for a capitalist to build a real market solution that worked for buyers and sellers. That solution happened to be delivered by Steve Jobs’ efforts to build an iTunes Music Store.

More than just a store, this initiative involved a lot of effort by Apple both to convince music sellers to accept reasonable conditions, such as selling single tracks for a fair price, and to deliver consumer-friendly marketing that explained why customers should pay a reasonable price for music protected by FairPlay DRM instead of downloading bootleg songs.

Neither the music studios nor the public, and certainly not the populist tech media, were entirely happy about this compromise, but that’s usually the sign of a good negotiated agreement. The result dramatically helped Big Music survive the implosion of the CD business while also enabling new smaller independent music makers to find big new audiences for their work who could support it financially.

It was Apple’s creation of a real market for music downloads that enabled Spotify to launch its alternative of music subscriptions where you rent access to songs. Spotify made itself a landlord of content it doesn’t even own, and gave itself unilateral power to modify its leases as desired. It still isn’t making money on this “business” so it wants a subsidy from the directory that it gets its tenants from. So it ran to the group that tried to mandate Mini-USB as the last cable users would ever need.

Watch for the ads

“File sharing” as introduced by Napster and others did none of the things Apple did to launch iTunes as a legitimate market for music. Instead, it diverted tons of money upward to greedy startups who built short-term, shallow, and temporary robber baron dams that aimed to siphon off as much money from music distribution as they could while contributing as little as possible to the industry and doing close to nothing for end user customers apart from using them as advertising eyeballs.

Surveillance advertising is a key sign that a business model is flawed. If customers will only use a product if it’s covered in advertising and collecting data to support more advertising, it’s probably not a good product.

If people will pay a premium for a product with little or no ads connected to it, then a working market has been created. This is evident in the history between Macs and Windows PCs, between iOS and Android, between Apple TV and Netflix, between Siri and Alexa, and nearly everything I’ve written about in the last two decades.

But right now, I’d like to explain what this has to do with Apple’s App Store. For that, we need to see where it came from.

Bear with me for a review of the facts that so many populist tech writers have conveniently forgotten as they recommend and endorse the idea of big government intervention in personal computing software sales.

The world before the App Store

Apple’s modern App Store has only existed since 2008. That means the company was selling computers that ran software for nearly 30 years before it deemed it necessary to develop a software store that imposed restrictions and responsibilities on third-party developers. Why not stage a populist revolution and go backwards into the wonderful good old days of “open software?”

While the App Store was still under development, Steve Jobs pointed out that the then new iPhone would need more protections in place due to its location services, always-on mobile network, its camera and microphone, and other factors that made it very different than the 1980s model of a PC.

Malware infected PCs could destroy your Office files and cause frustration. They couldn’t track your exact location and record your activities at the supermarket and at home and assemble a very detailed profile of all of your activities and associations in a way that would make East Germany’s state surveillance look unsophisticated.

Across several years prior to the release of iPhone, the Mac, and to a much larger extent the Mac’s doppelganger from Microsoft known as Windows, were already suffering a full blown crisis of malware threats. Increasingly ubiquitous networking had helped create the perfect Petri dish for planting malicious code and creating increasingly sophisticated networks of bots that had infected individuals’ personal computers and corporate fleets of PCs.

They weren’t just stealing marketing data. Malware was beginning to assemble captive PCs into remote controlled bot networks that could be used to deliver enormous denial of service attacks that could bring down companies, wreck industries, threaten governments, and aid terrorist factions.

If this reality moved from a threat posed by a billion Windows PCs to what would become billions of mobile devices, it would create global chaos.

The early PC’s naive openness was being exploited on an industrial scale, and the only defense seemed to be antivirus software that could be installed to scan for known malware and perhaps block or limit open firewall ports or other vulnerabilities. These solutions taxed performance and required regular updates, and often installed other cruft that was essentially also malware to generate revenue from the task of blocking other malware.

Apple successfully begins sales of security and privacy

Apple’s Mac had some natural immunity from the malware designed specifically for Windows. It was also safer just by virtue of being a smaller platform that offered less commercial payout for exploiting. Why write malware specifically for Macs when a vastly larger target existed on Windows, which was much easier to exploit anyway?

Across the early 2000s, Apple touted the Mac as a safer, more secure platform, particularly in comparison to Windows PCs that were being hobbled by an outbreak of malware that Microsoft was struggling to gain control over. The solution that Microsoft devised was a new “trustworthy computing” architecture known as Palladium that was intended to retrofit the hardware and software of the PC with authentication systems that could police malware and kill vectors for spreading it.

Through Palladium, Microsoft planned to strengthen its hand on the policing of malware.

The downside, of course, was that Microsoft had already earned a reputation as a ruthless monopolist that killed competition and “embraced and extinguished” open standards to promote Windows as the only way to do anything. It had effectively derailed the newly developed open web browser to force the web to instead serve as just another part of the Windows API.

Microsoft had done everything it could to stifle software development on other platforms, including Apple’s Mac. Why would anyone trust Microsoft with the power to enforce software security?

Microsoft had proved itself to be an untrustworthy partner who didn’t care about the needs of end users and flouted the laws of the land. The only reason anyone did any business with Microsoft was that it was the only game in town, because it had erected vast walls to prevent any competition to Windows at any level.

Additionally, Windows itself wasn’t very good. This turned out to be part of the solution of what to do about malware and Microsoft both.

What solution to remedy Microsoft’s monopoly abuse?

The Clinton Administration had just sued Microsoft over its monopolistic behavior in the ’90s. The court ultimately determined that the company was in fact abusing its monopoly over PC operating systems. The question left was how to rectify this issue and level the playing field to promote healthy competition in the interests of end users, developers, and hardware makers.

One of the proposals was to break up Microsoft the same way that AT&T had earlier been busted up into a series of “baby bells” with the intent to create competition for long distance phone service.

In retrospect, this solution would likely have been disastrous. Rather than reducing Microsoft’s control over personal computing, it would more likely have entrenched it, merely adding more bureaucracy and complicating the way that the various broken-up parts of Microsoft could productively work together and with partners, competitors and end users.

The imagined efforts to control and police Microsoft could effectively make the government a parasitic partner of Microsoft and contribute to its exercise of control of the industry and the users of PCs, making things worse rather than better.

It turned out that the government ultimately did nothing. Instead, rather than being solved by “the government being here to help,” Microsoft’s monopolistic control over personal computing was initially threatened and then dramatically crushed by Apple and the functioning of competition in open markets.

Nobody would have believed this could possibly happen back in 2000.

Get a Mac, Rip Mix and Burn

After innocuously teasing the PC monolith with its “Get a Mac” marketing, Apple released iPod and eventually its iPhone and iPad, incrementally offering a better solution to the question of where personal computing should go. We can only hypothetically imagine what government intervention might have done to Microsoft and its world of Windows PCs, but this would all probably have been handled with the same incompetence as Covid-19.

Yet we are now living in a solution where the largest personal computing platform is being run by Apple, and rather than acting to destroy all competitive threats to its phones, tablets, and Macs and force every end user and company to exclusively buy everything from only one source, Apple simply offers what it thinks is the best solution in competition with Microsoft in PCs, with Google and its Android and Chromebooks, and global competitors offering everything from watches to TVs to phones, tablets, and an array of computing devices and appliances that run a pantheon of operating systems and App Store alternatives.

Apple’s iTunes is offered for Android and Windows under the new branding of Apple Music. Apple services from Apple TV to AirPlay are broadly licensed across various competitive TV and audio device makers.

Conversely Google, Microsoft and thousands of other competitors host their own competing apps and services on Apple’s App Stores. Not only is Microsoft’s monopoly effectively gone, but the very goal of erecting abusive monopolies has fallen out of favor.

Why win all alone as an oligarch when you can participate in a functional marketplace where everyone wins together?

Today Apple is much more profitable than Microsoft ever was at the height of its monopolistic control of personal computing. Even Microsoft is vastly more profitable as a player in competitive markets than it was at the helm of its peak dinosaur era of royal tyranny.

Open competition solved monopoly abuse

As a dinosaur monopolist in the early 2000s, Microsoft at first didn’t take Apple very seriously. As iPod began to suggest a threat to Microsoft’s ambitions for Windows Media Players and its PC-like platform of hardware makers selling Windows-branded MP3 players, the empire struck back with initiatives to kill off Macs and iPods by exerting control over the availability of music and video.

Microsoft DRM intended to lock up digital content so it would only efficiently play back on Microsoft-approved hardware.

Initially, Apple could promote Macs and iPods as being able to rip music from CDs, creating personal catalogs of music for iTunes that Microsoft couldn’t block. But the movie industry was working to make sure films couldn’t be so easily moved around, because freeing the content from its disc hardware would facilitate file sharing, flooding the internet with bootleg copies that would kill the value of commercially recorded movies.

That was already occurring with digital music on CDs, prompting parallel efforts to devise a next generation CD format that similarly couldn’t be ripped for use on a computer or media device. Microsoft and Sony were both pursuing DRM formats for discs that would empower unreasonable restrictions on users and likely make it very difficult for alternative platform vendors like Apple to ensure their users could still access music and the future of videos.

In response, Apple decisively worked to build an iTunes Store, initially as a way to ensure that its users could buy music, and later TV shows and movies, for use on Macs and iPods. The iTunes Store wasn’t created to be a profit center; it was run strategically to keep music and later movies available. Shortly before the iPhone appeared, Apple began experimenting with iPod Games, effectively software titles that were sold in the same model as iTunes songs.

The problem of too much open

When Apple introduced iPhone in 2007, the company initially indicated that the platform for creating third party iPhone apps would be built through web apps, enabling anyone to build software that could run on iPhones with minimal restrictions apart from those familiar to the web as a platform. Apple had already invested in building its own Safari web browser, initially with the goal of ensuring that web apps and websites in general would work well on the Mac, regardless of what Microsoft or others might do to the web if they controlled the web browser.

The web as a platform had initially been built to be an alternative to Windows and its monopolistic control of the personal computer. Microsoft tied the web into Windows to neuter its threat. But the web struggled to soldier on as a cross-platform alternative to the Win32 API that Microsoft owned.

Just as iTunes acted to keep music open and available for Mac users, Safari did the same for web apps on the Mac.

The web had been designed to handle a host of security and privacy issues. Web apps couldn’t just copy data to your system without restriction or access system files or read your personal data files, the way native PC software you installed as an administrator generally could.

The web was firewalled by design, as if sandboxed above your personal computer to help prevent any malicious web code from exploiting your security and privacy. But this control also reduced the efficiency and performance of web apps designed to work within these limits.

Critics initially howled at the prospect of third party iPhone apps being limited to just web apps. Apple’s own software titles for iPhone 1.0 were sophisticated and far more powerful and clearly easier to build than web apps limited by the basic, generalized design of the web that made “running anywhere” more important than “look and feel” and overall performance and power.

There are people today who believe Apple totally got this wrong and was forced to backtrack and build an App Store for native iOS apps due to vocal protest.

It’s no conjecture that Apple had been working to build an App Store beyond web apps. Steve Jobs publicly stated that the company understood the benefit of native local apps and was working to develop the technology to secure such apps for iPhone.

Months before the iPhone launched, Jobs specifically mentioned efforts by Nokia and others to encrypt native apps for secure delivery so that the phony apps, malware, and spyware that had grown into an epic pandemic on personal computers wouldn’t do the same thing to emerging mobile devices.

Beyond being a nuisance for users and a threat to their privacy and security, a wide open, unrestrained model of software borrowed from the ’80s PC would result in the same issues that had plagued the CD in music. Bootlegs, “file sharing,” counterfeits, and poor quality copies began to destroy the entire retail system supporting content creators and everyone else involved in producing commercial music content.

Apple didn’t invent the underlying concept of the competitive App Store with restrictions to protect the platform, its users, and developers. Vendor-secured software titles had existed in some form in video games since the dawn of personal computing. On consoles, the first Nintendo console titles were licensed by the company to rein in problems related to poor quality titles that threatened to cause hardware damage or sully the reputation of the gaming system, or to destroy the commercial underpinnings that supported game development and availability.

Sony’s PlayStation and Microsoft’s Xbox similarly imposed strict control over their own game consoles to prevent bootlegs, knockoffs, and the glut of low quality titles that had once nearly destroyed personal video gaming with the bust of Atari 2600 in the early ’80s, contributed to by the “open” bins of crap software cartridges the company failed to get under control.

In the emerging world of early smartphones, the same issues played out. The “open” and unrestricted copying of apps on early mobile platforms like Palm made it effortlessly easy to trade around titles, erasing any reason to pay for them.

Those who wanted to pay for a valuable mobile app were relegated to tiny niches of demand, causing basic titles for business or professional use to be priced very high. Everything else declined into shareware, making it virtually impossible to create a real business building mobile software.

Users were deprived of choices beyond shoveling through Atari-like bins of unfinished junkware, or paying through the nose for very expensive, low volume software titles small developers were trying to pay their rent with.

The familiarity of stealing software downloads in the model of music “file sharing” had reached a crisis just as miserable as Microsoft’s monopolistic control of Windows PCs and the malware and spyware it caused. Software was becoming difficult to sell for developers, and it was equally difficult for end users to buy functional, attractive, complete, and well supported software.

The compromise between a dictatorship and chaos

A number of companies tried to find a solution to these extremes. In addition to Nokia’s work in building a store for encrypted mobile apps, Danger also operated a store where apps could be securely downloaded. In 2008, Apple opened its new App Store, which just like iTunes had done for music, would bring mobile apps with reasonable security and privacy restrictions to iPhone at much lower prices through high volume sales.

Many of the same critics of iPhone web apps in 2007 imagined that the real solution would be third party app stores and wide open online downloads offering unrestricted access to anything, including titles that Apple expressly didn’t want in its App Store. That included copyright violations, knockoffs and bootlegs of existing apps, along with apps that hacked into iOS to expose features that might change, causing stability problems and other issues.

Over time, Apple’s efforts to secure its iOS platform, along with its efforts to address the concerns driving users to want to sideload apps, effectively killed mainstream interest in alternative app stores. Much of the plumbing Apple built to secure the iPhone and its App Store, and to prevent fake apps from running, was drawn along the same concepts as Microsoft’s Palladium from years prior.

Users and developers were more willing to trust Apple because it had earned a reputation of working in the interest of its customers and partners. The App Store design and its security systems allowed developers to finally be able to build real businesses while selling their software titles for relatively little. End users benefited from enormous choice at very affordable prices.

Alternatives were not better

Conversely, Google promoted the idea that a wide open software model with little quality control and few restrictions would be better. Yet a decade and a half later, it’s impossible to argue that Google was right. It has presided over a reckless festering dump of ripoffs and bootlegs riddled with nearly as much malware and spyware as Windows from 20 years ago.

Android developers can’t earn sustainable revenues from app sales the way they can on iOS, so they have to make up for it by inserting advertising. And ads are only really profitable if they’re supported by surveillance that can siphon up enough data to report effectiveness.

That’s a privacy issue as well, and it results in low quality titles that focus on their real client, which is advertisers, not end users.

Microsoft closely copied Apple’s app store model for Windows Phone, but its half-efforts weren’t able to attract enough attention from developers already happy with iOS or content with the alternatives of Android. The web app remained an option for building general tools that could work cross platform.

A variety of companies have launched their own platforms with their own app stores for everything from video game consoles to cars.

Never before have world governments decided that Tesla should be forced to install Apple Maps or CarPlay on its cars, or that Sony should be forced to distribute game titles from a developer who doesn’t want to pay for access to its development tools and follow its store rules, or that the New York Times should be forced to distribute my articles because it has such a big platform and I have something I want to say, but I don’t want to pay to advertise my thoughts.

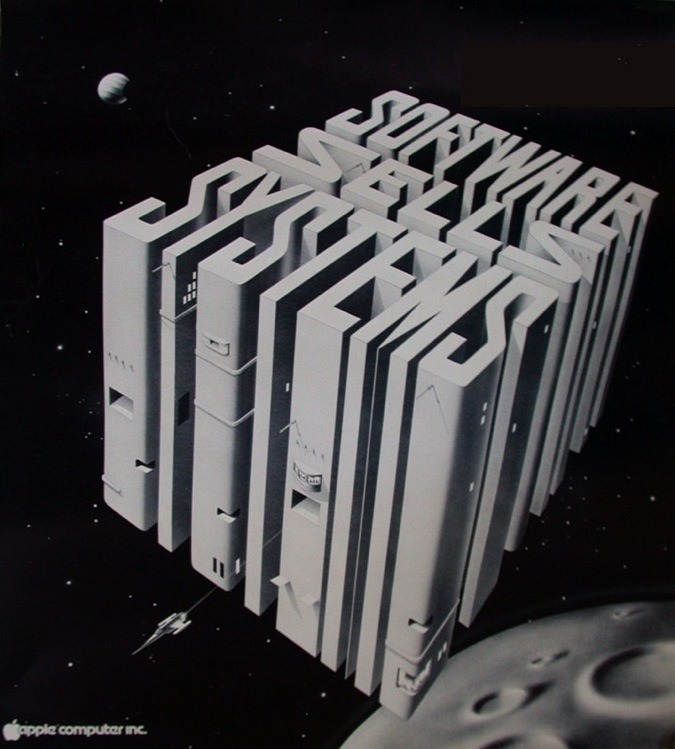

Apple redefined software 40 years ago

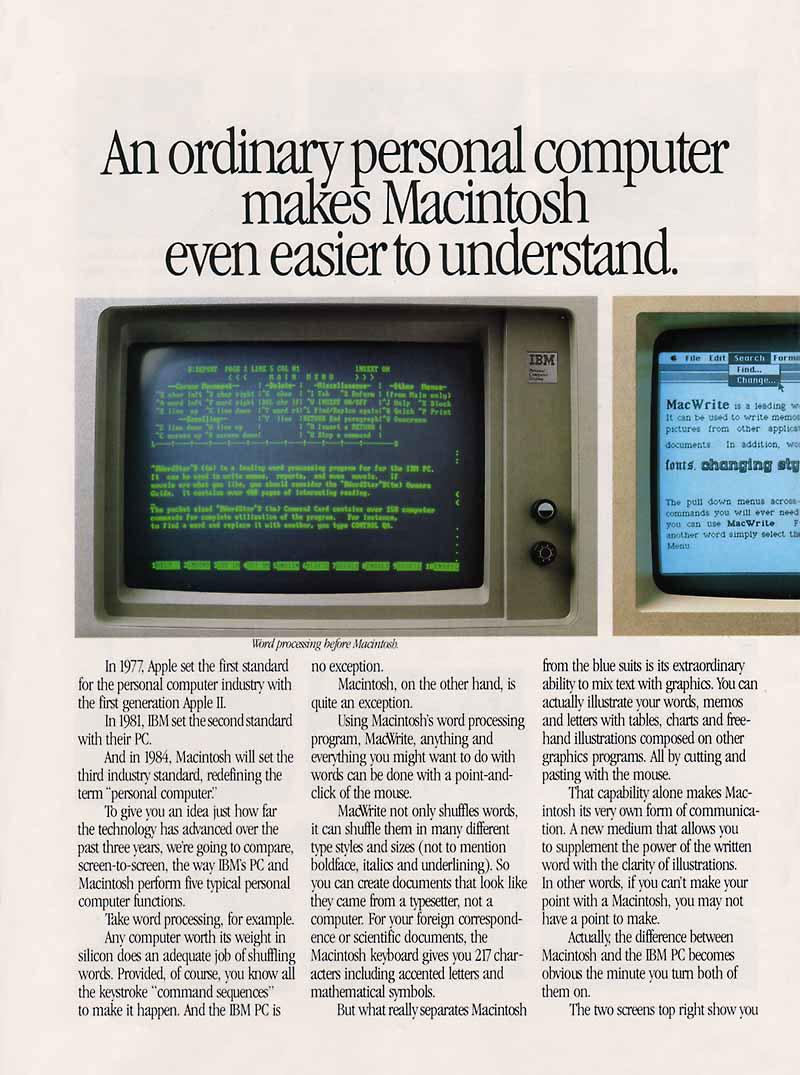

Building an app for iOS is a far cry from how things worked on the original IBM PC. Back in the early ’80s, the open world of PCs shipped with a minimal Disk Operating System that did little more than provide access to a storage drive and facilitate loading a program into memory to effectively take over full operation of the PC.

In that context, one of the most remarkable things Apple introduced with its new desktop computing model of the Lisa and Macintosh was the idea of restrictive user interface guidelines that defined how the entire system should work. This included lots of shared code Apple included in its Mac OS, at the time referred to as its System Software.

Unlike a simple DOS, the Mac’s System Software supplied a series of code libraries or “toolboxes” that developers could access to perform many of the common functions of the system. The result would be that software titles would all look and work consistently, because it was mostly Apple’s own code drawing the overall interface, handling printers, providing access to the network, and so on.

This novel software model was first introduced on the Lisa, which also shipped with a broad set of office productivity software Apple had written. Apple bundled so much ready-to-use free software for Lisa that the company grew worried that there might not be enough opportunity left for third party developers. That prompted the company to hold back with the Macintosh, and instead work with third party developers to deliver a range of titles users could buy after their hardware purchase.

For years, Apple continued this philosophy of restraining its own bundled software offerings so as not to step on the toes of its third party developers. At the same time, it continued to embellish the Mac’s System Software to perform a greater variety of functions that developers could draw upon.

This included sophisticated digital video support with QuickTime, advanced typesetting in QuickDraw GX, and even early support for virtual reality models and scenes with QTVR.

By the mid 1990s, Apple was increasingly building expensive-to-develop software fundamentals, and allowing third party developers to earn revenue using this code, essentially for free. Meanwhile, it struggled to sell Mac OS as a retail product. Developers were making more money off the Mac and Apple’s software than Apple was.

Among the companies making more from the Mac than Apple was Microsoft. It ultimately decided to appropriate all of Apple’s work to deliver Windows on the IBM PC.

At that point, Apple was not only giving away its code for free, but forced to compete with a knockoff of its entire platform. Even worse, key Mac developers were leaving Apple to devote their efforts to making software for Windows.

For many years, Apple struggled with the conundrum of how it could invest in building new macOS software and get users to pay for updates, and at the same time, build its own first party apps that might compete with third party offerings and push even more developers away from its platform. The problem of Apple coddling its developers at its own expense and detriment was finally resolved by iPod.

iPod ends open third party development

Unlike the Mac, iPod shipped as a complete system package, updated on a regular basis with first party functions such as calendar, contacts, and notes. Returning to how the Lisa was delivered, iPods were a ready to use package that didn’t rely on third party developers.

Third party games were only later made available in partnership with a few specific developers, and sold through iTunes where Apple could earn a cut of their revenues.

When Apple introduced iPhone, it was again in the same model as Lisa — bundled with a full suite of ready to use apps. The next year, Apple’s new App Store opened the door to initially quite whimsical third party apps from games to fart sounds and pretend beers. The serious apps on iPhone were from Apple: Maps, Mail, Messages and so on.

As third party developers started delivering more consequential and useful apps that sold for real money, Apple began charging a cut of their revenues to help pay for the development of iOS, its development tools, and the App Store itself. This was the same model employed in selling songs, videos, and games in iTunes. This proved to be wildly successful for Apple, and helped to deliver regular, fresh advances in iOS that helped keep iPhones rapidly refreshed and competitive.

At the same time, third party developers were able to sell large quantities of their apps for relatively little, earning sustainable profits for their work. Writing and maintaining similar software for personal computers had often been far less profitable due to piracy, particularly for small and specialized niche developers.

The App Store had solved major business model problems that had repressed the development of the Mac and had thwarted the development and interest of third parties.

The App Store changed everything for everyone

Apple’s successful model of software distribution and sales through the iOS App Store was subsequently copied by the increasingly benevolent dictatorship of Microsoft’s Windows as part of its efforts to desperately liberalize itself in the model of Apple.

Microsoft belatedly realized it needed to mimic Apple’s nimble strategies to avoid dying out as a dinosaur stuck in the mud. It had devoted too much attention to eating everything and had not invested sufficient effort in strategically planning for the future or adapting to a changing environment.

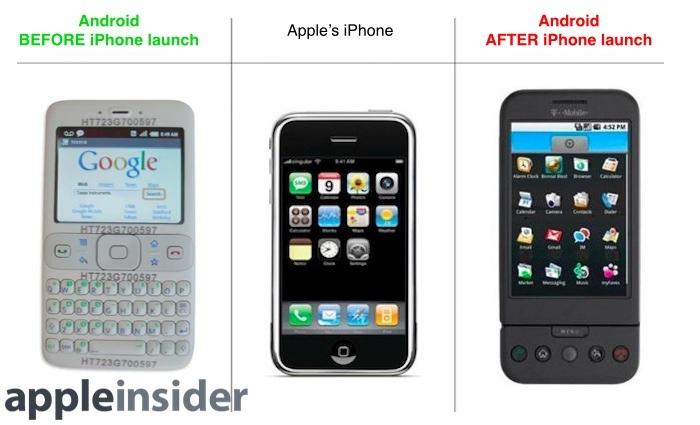

The App Store model of sharing a revenue cut from developers was even adopted by Google’s Android. Despite portraying themselves as a collective of pirate anarchists who want unrestrained open chaos, Android enthusiasts really just want a copy of Apple’s iPhone, but don’t want to pay for it.

Google, Microsoft, and many others would also like to take over Apple’s valuable App Store territory on iOS because they could make more money selling apps to Apple’s customers than on their own platforms, particularly if they could figure out how to force Apple to give them prime retail space without paying anything for it. But wanting something doesn’t mean you have a right to it.

Monopolies on vacation

This reminds me of a third analogy I encountered in Thailand over the last month of traveling. In addition to the lessons of half-building unsustainable towers and in contrast to the impressive Retail Store Apple just completed at IconSiam, the third infrastructure example that struck me was the construction of a small private island airport in Koh Samui.

Tourism on the island brings in a steady flow of air traffic, but the quite-rural island and its little airport have limited capacity to handle huge volumes or very large planes. The government of Thailand built an enormous new airport in Bangkok to serve its massive capital city, but the smaller regional airport in Koh Samui is actually owned by Bangkok Airlines, which built this infrastructure for its own use.

This airline has an effective monopoly over air traffic to the island, so if you want to fly in, you have one option to buy tickets. There are alternative ways to get there, including a very long train ride or trip by car, or flying into another airport and taking buses and a ferry to reach it. There are also a lot of other islands and other destinations one could alternatively choose to go to for many of the same experiences.

Is this a problem to be solved by the government? Certainly Thailand could build its own public airstrip. This would involve major expenses in finding the land and justifying the investment when a sufficiently functional airport already exists.

Other airlines could also put their own money into building a rival airport, again with limited benefit for anyone and likely vast environmental cost to the island and its population.

I ended up having to pay a minor premium to fly into Koh Samui rather than to another airport served by multiple competitive airlines all fighting to lower ticket prices. It would have been really unrealistic to try to avoid paying this premium by taking a much longer, inconvenient route, and certainly by building my own airport!

It is also likely that if the country built a large new public airport, it would have to charge such steep usage fees and other taxation that customers wouldn’t even benefit from any cost reductions, even with the prospect of new competition. Perhaps there isn’t enough air traffic to support multiple airlines all trying to profitably support their own fleets of planes. Perhaps there shouldn’t be.

Sometimes identifying a problem doesn’t mean that the solution you propose is workable, reasonable, cost effective, or even necessary. Users and developers have often complained about elements of the App Store they didn’t like. Many of these issues have been incrementally addressed by Apple.

Some of them are only coming from a small minority of rich developers who want to make more money using Apple’s framework code, development tools, and retail store without paying anything.

Apple’s own battles with monopolies

Apple itself has frequently faced monopolies — or inhospitably crowded markets — of its own. In addition to successfully battling the Great Monopoly of Microsoft’s Windows and defeating Microsoft’s threatened control over commercial music and video, the App Store itself is another example of how to beat an effective monopoly or towering barriers to adoption. It didn’t require government involvement.

In the smartphone era before iPhone, the established mobile app platform was effectively JavaME and Flash Lite. Virtually all mobile devices had to bundle both to be taken seriously, because mobile devices of the time could barely even run a full web experience, and certainly not the full Windows API. Java and Flash were so entrenched that the main question from tech journalists in 2007 was how and when iPhone would run Java and Flash.

The answer was never. Instead, Apple developed a better solution to mobile software.

For the first six months, this leaned on the familiar web tools for building apps, which Apple had already created in Safari and WebKit. But Apple also had developed a full platform of even more powerful app frameworks for the Macintosh.

Across 2007 and up to the launch of the App Store the following year, it became increasingly obvious that Apple’s plan for the future of mobile devices was not to invest in the horrible mess of Java and Flash, but instead to enable an optimized version of much of its existing Mac platform to run on its relatively powerful mobile devices.

This was such an impressive undertaking that competitors initially refused to believe Apple had done this. They preferred to believe this was just a marketing trick. They quickly realized not only that they were wrong about Apple, but also that they were completely unprepared to do anything similar.

Apple didn’t just set up a gatepost and charge developers rent. It developed the most valuable mobile platform with sophisticated software that was worth more than it was charging.

For developers, the iOS App Store was a smoking great deal even with the minor cut Apple was charging.

The cheap and dirty alternative

Conversely, Google did the opposite. Rather than invest in building its own sophisticated computing platform, Google did what Microsoft had done a generation earlier: it appropriated the solution that already existed and invested just enough in hopes others would do the work to make it rich.

Instead of copying the appearance of Apple’s Mac to deliver Windows on top of DOS as Microsoft did in the early ’90s, Google copied the appearance of iPhone to deliver Android on top of an illicit fork of JavaME. Microsoft at least owned DOS; Google maliciously and shamelessly stole Sun’s Java shortly before Oracle paid for it.

What Java shared in common with MS-DOS was that both were garbage and ill-suited to building a foundation for the future of personal computing. Rather than working to build something really great, as Apple had, Google had copied Microsoft’s efforts to do the least work possible and just install itself as a monopoly that could charge money for owning the underlying technology.

Google specifically wanted to dethrone Microsoft and get around its threats to monopolize all control of web search and advertising under Windows Vista and newly emerging mobile devices. While it couched Android as a free and “open source” alternative to the evil Microsoft monopoly, and perhaps also the threat of another proprietary OS from Apple, Google demonstrated that its free and open philosophy was total poppycock posturing when it threw its support behind Adobe’s proprietary Flash.

Three years after iOS and its App Store had victoriously proven that iPhones didn’t need Flash at all, Apple introduced iPad with the same model of native iOS mobile apps and open access to web apps. Developers that didn’t want to participate in Apple’s iOS App Store but still wanted to get their software on iPads could develop their own web apps, as Facebook notably did for years.

Google had delivered Android as a rip-off of JavaME, with non-native apps that were compiled by an illegitimate appropriation of Java runtime code written and owned by Sun/Oracle. That made it just as inauthentically shameless for the purported open source greenwasher to adopt the fully proprietary Flash as the development platform of Google tablets hoping to compete with Apple’s iPad as the 2010s began.

There was nothing to compile or customize or create your own fork of in Google’s implementation of Android Flash. It was as if they’d licensed Windows and the open source crowd of Android fanatics were still celebrating and setting off fireworks because all of their sacrosanct guiding principles and great morality of superiority were fake and had been all along.

Watching Google’s flock of Android open source advocates all worshiping at the altar of proprietary Flash as their new savior was nearly as comical as watching their ideology crash and burn in an exhaustively long and embarrassing fire that blazed on for years because their Dear Leader had integrated Flash so tightly into the bowels of Android.

In the end, it was clear that not only should Flash not be in any mobile device, but that it should also be ripped from every personal computer and thrown in the dustbin of history. This finally enabled Apple to remove it from the Mac desktop.

Rather than competing against iOS as a platform, Flash had proven that it was itself a terrible fiefdom monopoly that needed to be crushed.

This was virtually single-handedly done by Apple as its competitors and the tech media booed and moaned that Apple should also adopt Flash and continue its miserable reign over web videos and rich web apps. Rather than earnestly working to support Flash on mobiles, tablets, and the web as Microsoft and Google had, Apple instead pushed an open industry initiative: HTML5 interactivity paired with H.264 video.

Apple not only took on its big competitors pushing Flash, but also subverted the incessant rage of tech media journalists who insisted that Apple was harming customers by refusing to force feed them Adobe’s proprietary Flash as the only way to do anything.

When members of tech media identify “a monopoly” as a problem to be fixed, perhaps they don’t know what they’re talking about. Perhaps there’s nothing really inherently bad with monopolies and proprietary ownership of code or technologies, and the real problems lie with abuses of one’s position or market power. Perhaps proposed solutions to a problem aren’t really answers but really just complaints ungrounded in reality.

Perhaps Apple’s App Store is, like the Koh Samui airport, just right for the island it serves, and if you really hate having to pay for it you should visit another island instead. Perhaps the solution to issues of monopoly control of any kind is to offer a compelling, attractive and better alternative, as Apple has time and again.

Perhaps governments breaking up “a big monopoly” to introduce smaller solutions that don’t really result in functional markets but only make things worse is not the best solution, and the real solution is to enable new competitive markets that earn their own customers.

This all happened before.

This story originally appeared on AppleInsider