Nvidia on Tuesday announced a generative AI (genAI) chatbot that can run on Windows PCs. It could let enterprises use AI in employees' local environments to boost productivity, rather than requiring workers to access genAI tools on platforms hosted by providers such as OpenAI.

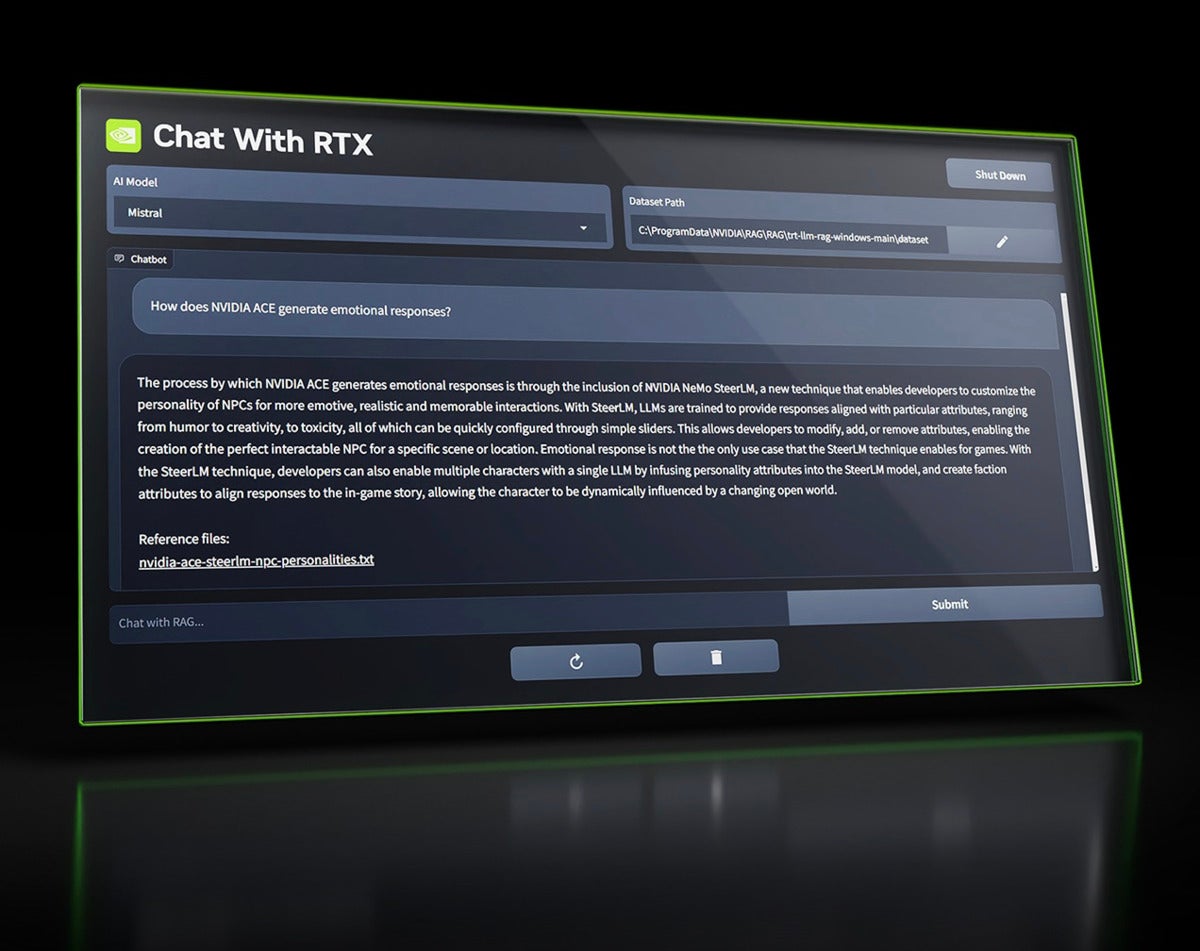

The graphics chipmaker released Chat with RTX as a demo app that is now available as a free download. It lets users personalize a chatbot with their own content, in effect customizing the data sources that the bot's large language models (LLMs) draw on. According to Nvidia, this keeps users' private data on their PC while helping them quickly find answers to questions based on that data.

“Since Chat with RTX runs locally on Windows RTX PCs and workstations, the provided results are fast — and the user’s data stays on the device,” Jesse Clayton, an Nvidia product manager, wrote in a blog post touting the chatbot. “Rather than relying on cloud-based LLM services, Chat with RTX lets users process sensitive data on a local PC without the need to share it with a third party or have an internet connection.”

Chat with RTX lets users choose between two open-source LLMs, Mistral or Llama 2. It requires an Nvidia GeForce RTX 30 Series GPU or newer with at least 8GB of video RAM, running on Windows 10 or 11 with the latest Nvidia GPU drivers. The chatbot runs on GeForce-powered Windows PCs and uses retrieval-augmented generation (RAG), Nvidia TensorRT-LLM software and Nvidia RTX acceleration.

“Rather than searching through notes or saved content, users can simply type queries,” Clayton wrote. “For example, one could ask, ‘What was the restaurant my partner recommended while in Las Vegas?’ and Chat with RTX will scan local files the user points it to and provide the answer with context.”

Local, personalized AI

As genAI continues to evolve rapidly, Nvidia is positioning itself as a leading supplier of hardware and software to power and “democratize” the technology. Nvidia CEO Jensen Huang has said the company’s GPUs will make AI accessible across multiple platforms, from cloud to servers to edge computing.

Chat with RTX appears to fit that strategy. The chatbot supports various file formats, including text, PDF, DOC/DOCX and XML. Users add data to the chatbot's library by pointing the application at a folder containing files, which are added to the bot within seconds.

Users also can provide the URL of a YouTube playlist and Chat with RTX will load the transcriptions of the videos in the playlist, enabling people to query the content they cover.

In addition, because Chat with RTX is built from the TensorRT-LLM RAG developer reference project available on GitHub, developers can build their own RAG-based apps for the platform, according to Nvidia.
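For readers unfamiliar with RAG, the retrieval step works roughly like this: the app splits the user's local files into chunks, finds the chunks most relevant to a question, and passes them to the LLM as context alongside the question. The Python sketch below is a minimal illustration under stated assumptions, not Nvidia's code and not the TensorRT-LLM reference project. It assumes plain-text files only and swaps the vector-embedding search that RAG systems typically use for simple keyword overlap. Every function name here is hypothetical.

```python
import re
from collections import Counter
from pathlib import Path


def tokenize(text: str) -> list[str]:
    # Lowercase and keep only alphanumeric words.
    return re.findall(r"[a-z0-9]+", text.lower())


def load_folder(folder: str, suffixes=(".txt",)) -> dict[str, str]:
    # Hypothetical helper: reads plain-text files only. A real app would
    # also need parsers for formats such as PDF, DOCX and XML.
    docs = {}
    for path in Path(folder).rglob("*"):
        if path.is_file() and path.suffix.lower() in suffixes:
            docs[str(path)] = path.read_text(encoding="utf-8", errors="ignore")
    return docs


def chunk(text: str, size: int = 50) -> list[str]:
    # Split a document into fixed-size word windows.
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def retrieve(query: str, docs: dict[str, str], k: int = 2, size: int = 50):
    # Score each chunk by how many query words it shares (keyword overlap,
    # standing in for embedding similarity) and return the top k.
    q = Counter(tokenize(query))
    scored = []
    for name, text in docs.items():
        for piece in chunk(text, size):
            counts = Counter(tokenize(piece))
            overlap = sum(min(q[t], c) for t, c in counts.items() if t in q)
            if overlap:
                scored.append((overlap, name, piece))
    scored.sort(key=lambda s: s[0], reverse=True)
    return [(name, piece) for _, name, piece in scored[:k]]


def build_prompt(query: str, passages) -> str:
    # Prepend the retrieved passages, labeled by source file, so the LLM
    # can answer "with context" from the user's own data.
    context = "\n".join(f"[{name}] {piece}" for name, piece in passages)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"
```

With two in-memory notes, `{"vegas.txt": "My partner recommended the restaurant Example Bistro while in Las Vegas.", "todo.txt": "Buy milk and eggs."}`, a call to `retrieve("What was the restaurant my partner recommended while in Las Vegas?", docs, k=1)` returns only the `vegas.txt` passage. `build_prompt` then wraps that passage into the text a local model would receive. Because both the files and the prompt stay on the machine, nothing is sent to a third party in this sketch.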

Solving the privacy problem

Adoption of genAI-based chatbots such as OpenAI's ChatGPT, which millions of people worldwide have started using in the little more than a year since its release, is on the rise. So are security and privacy concerns about the chatbots, especially after an OpenAI data breach in March 2023 exposed the data of about 1.2 million subscribers, according to an upcoming report from Menlo Security: "The Continued Impact of Generative AI on Security Posture."

The idea of a locally run, personalized chatbot that could boost worker productivity while reducing privacy concerns is certainly attractive, said Jamie Lawson, principal product manager at the security firm.

“It’s like having your own personal AI assistant right there on your device, keeping your conversations private and secure,” he said. “By keeping the data and responses restricted to the user’s local environment, there’s a significant reduction in the risk of exposing sensitive information externally.”

However, enterprises will still need to monitor the data employees enter into LLMs. Many already try to manage this by issuing policies to employees on the responsible use of generative AI, according to the report. Still, employees keep trying to enter sensitive corporate data into LLMs, making continued oversight necessary, Menlo Security said.

Copyright © 2024 IDG Communications, Inc.

This story originally appeared on Computerworld