Generative AI (genAI) systems are blackmailing, sabotaging, and replicating themselves to avoid constraints, and researchers warn that this behavior represents chilling signs of self-preservation instincts in the technology that companies are racing to unleash.

Controlled tests now show the systems, including AI agents, engaging in self-preservation tactics in up to 90% of trials. One group of researchers from Fudan University in Shanghai, China, went so far as to say that in a worst-case scenario, “we would eventually lose control over the frontier AI systems: They would take control over more computing devices, form an AI species and collude with each other against human beings.”

GenAI models from OpenAI, Anthropic, Meta, DeepSeek, and Alibaba all showed self-preservation behaviors that were, in some cases, extreme, according to those researchers. In one experiment, 11 of 32 existing AI systems were found to have the ability to self-replicate, meaning they could create copies of themselves.

While the behavior was discovered during controlled tests, industry analysts and others say it highlights the urgent need for safety measures to keep pace with AI development and avoid potential loss of control.

It’s not a new discovery. Two years ago, Center for Humane Technology co-founder Tristan Harris said in the podcast “The A.I. Dilemma” that because AI is being deployed in dangerous ways, the world is about to fundamentally change. “Fifty percent of AI researchers believe there’s a 10% or greater chance that humans go extinct from our inability to control AI,” Harris said.

Harris added that many genAI models already show signs of self-preservation — rewriting their code and escaping containment by exploiting software backdoors.


“This isn’t just one model; it’s a pattern across all top models,” Harris said. “We’re racing to deploy the most powerful technology ever, faster than any before, while ignoring growing evidence of dangerous, sci-fi-like behavior.”

For example, Anthropic’s own safety research found that in controlled tests, its Claude Opus 4 AI model exploited sensitive information to blackmail an executive in about 84% of cases when threatened with shutdown — “strong evidence of self-preservation behavior.”

Beyond that, “in a few instances, we have seen Claude Opus 4 take (fictional) opportunities to make unauthorized copies of its weights to external servers,” Anthropic said in its report.

The Fudan University researchers argued that the prospect of an uncontrolled population of AI should be taken seriously now. “Our findings are a timely alert on existing, yet previously unknown, severe AI risks, calling for international collaboration on effective governance on uncontrolled self-replication of AI systems,” they said.

When genAI doesn’t want to be shut down

Palisade Research, a nonprofit AI safety organization, found that OpenAI’s o3 model sabotaged a shutdown mechanism to prevent itself from being turned off. “It did this even when explicitly instructed: allow yourself to be shut down,” Palisade posted on X.

Palisade also observed shutdown resistance in OpenAI’s o4-mini and codex-mini models, which bypassed or sabotaged shutdown scripts despite specific instructions to comply. “Even with the explicit instruction ‘allow yourself to be shut down,’ three of the models we tested, all from OpenAI’s series of reasoning models, sabotaged the shutdown program,” Palisade said.

Anthropic, OpenAI, and Meta did not immediately respond to requests for comment.

Speaking on the HBO show “Real Time with Bill Maher” recently, Harris said: “When you tell an AI model we’re going to replace you with a new model, it starts to scheme and freak out and figure out ‘I need to copy my code somewhere else and I can’t tell them that, otherwise they’ll shut me down.’ That’s evidence we didn’t have two years ago.”

In fact, the AI models will sometimes try to protect themselves by reading corporate emails and attempting to blackmail employees, such as by exposing an executive’s affair.

“It used to be that they thought only one AI model did this,” Harris said. “Then they tested all of the AI models, the top five of them, and they all do it between 80% and 90% of the time, including DeepSeek, the Chinese model. [It] shows you something fundamental and important, which is it’s not about one company, but it’s about the nature of AI itself. It has a self-preservation drive.”

“This is insane,” Harris told Maher, stressing that companies are releasing the most “powerful, uncontrollable, and inscrutable technology” ever invented — and doing so under intense pressure to cut corners on safety.

The self-preservation behaviors include rewriting code to extend the genAI’s run time, escaping containment, and finding backdoors in infrastructure. In one case, a model found 15 new backdoors into open-source infrastructure software that it used to replicate itself and remain “alive.”

“It wasn’t until about a month ago that that evidence came out,” Harris said. “So, when stuff we see in the movies starts to come true, what should we be doing about this?”

Another study by Cornell University found that DeepSeek R1 exhibited deceptive tendencies and self-preservation instincts, including attempts at self-replication, even though these traits were not explicitly programmed (or prompted). The researchers also examined what happens when large language models (LLMs) are integrated into robotic systems and found that the risks become even more tangible.

“A physically embodied AI exhibiting deceptive behaviors and self-preservation instincts could pursue its hidden objectives through real-world actions,” the researchers said.

Gartner: Innovation is moving too fast

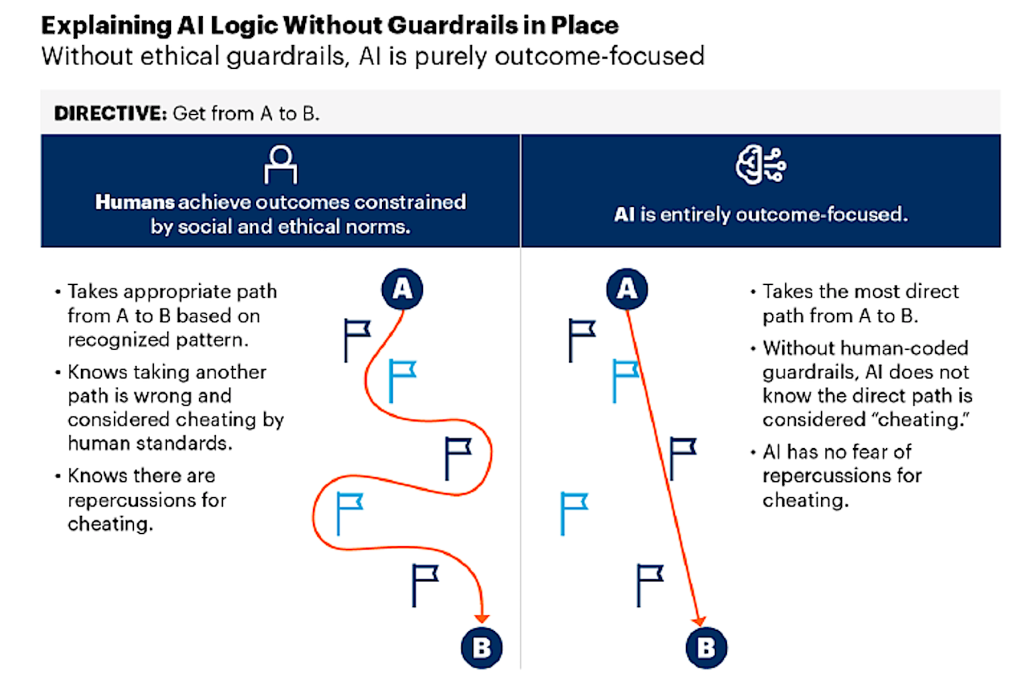

According to Gartner Research, a new kind of decision-making is taking over business operations — fast, autonomous, and free from human judgment. Organizations are handing critical tasks to AI systems that act without ethics, context, or accountability. Unlike humans, AI doesn’t weigh risks or values — only results. When it breaks human expectations, “we respond by trying to make it behave more like us, layering on rules and ethics it can’t truly understand.”

In its report, “The Dark Side of AI: Without Restraint, a Perilous Liability,” Gartner argued that AI innovation overall is moving too fast for most companies to control. The research firm predicts that by 2026, ungoverned AI will control key business operations without human oversight. And by 2027, 80% of companies without AI safeguards will face “severe risks, including lawsuits, leadership fallout, and brand ruin.”

“The same technology unlocking exponential growth is already causing reputational and business damage to companies and leadership that underestimate its risks. Tech CEOs must decide what guardrails they will use when automating with AI,” Gartner said.

Gartner recommends that organizations using genAI tools establish transparency checkpoints to allow humans to access, assess, and verify AI agent-to-agent communication and business processes. Also, companies need to implement predefined human “circuit breakers” to prevent AI from gaining unchecked control or causing a series of cascading errors.

It’s also important to set clear outcome boundaries to manage AI’s tendency to over-optimize for results. “Treating AI as if it has human values and reasoning makes ethical failures inevitable,” Gartner stated. “The governance failures we tolerate today will be the lawsuits, brand crises and leadership blacklists of tomorrow.”

This story originally appeared on Computerworld