Stuffed animals that talk back. Chessboards with pieces that move on their own. And a chatty holographic fairy in a crystal ball.

Your next toy purchase might be powered by artificial intelligence and able to converse with your kids.

Chatbots and AI-powered assistants that can quickly answer questions and generate text have become more common since the rise of OpenAI’s ChatGPT. As AI becomes more intertwined with our work and personal lives, it’s also shaking up playtime.

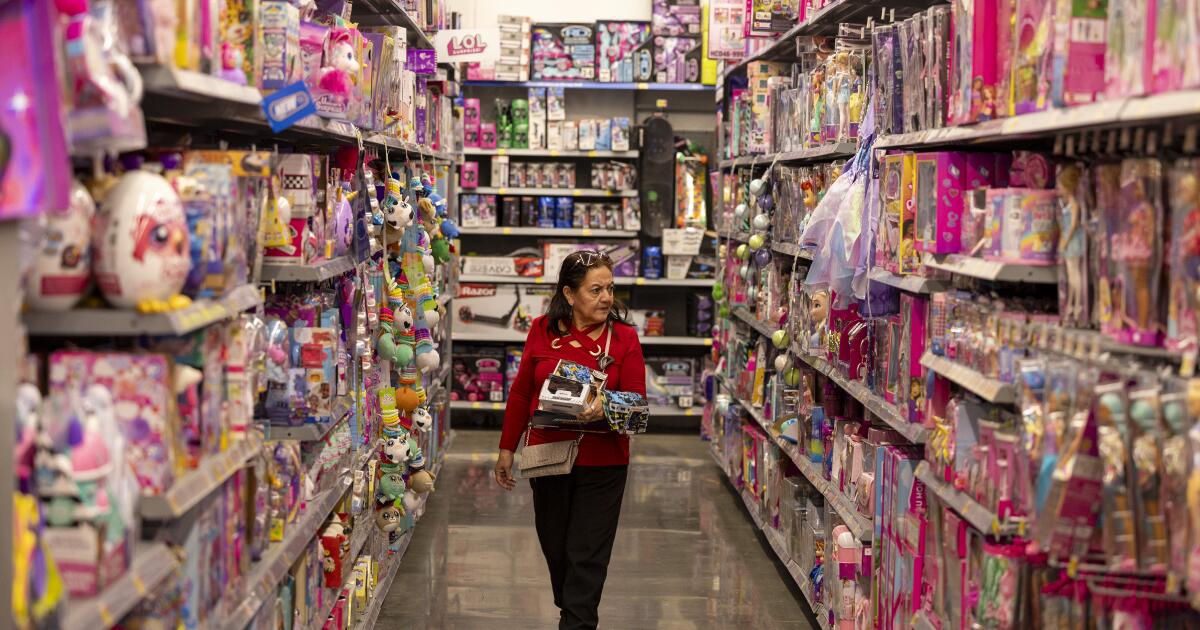

Startups have already unleashed AI toys in time for the holidays. More are set to hit the shelves for both kids and adults in the new year.

Some parents are excited to test the toys, hoping that the chatty bot interactions will educate and entertain their children. Others don’t want the seemingly sentient tech near their loved ones until it has more guardrails and undergoes further testing.

Researchers at the U.S. PIRG Education Fund say they have already found problems with some of the toys they tested. Among the issues: an AI teddy bear that could be prompted into discussing sexual fetishes and kink, according to the group.

Toy makers say AI can make play more interactive and that they take safety and privacy seriously. Some have placed limits on how chatty their products can be. They say they are taking their time figuring out how to use AI safely with children.

El Segundo-based Mattel, the maker of Barbie and Hot Wheels, announced earlier this year that it had teamed up with OpenAI to create more AI-powered toys. The initial plan was to unveil their first joint product this year, but that announcement has been pushed into 2026.

Here’s what you need to know about AI toys:

What’s an AI toy?

Toys have featured the latest technology for decades.

Introduced in the 1980s, Teddy Ruxpin told stories aloud when a tape cassette was inserted into the animatronic bear’s back. Furbys, fuzzy creatures that blinked their large eyes and talked, came along in the ’90s, when Tamagotchi digital pets were also all the rage.

Mattel released a Barbie in 2015 that could talk and tell jokes. The toy maker also marketed a dream house in 2016 that responded to voice commands.

As technology has advanced, toys have also gotten smarter. Now, toy makers are using large language models, which are trained to understand and generate language and power products such as OpenAI’s ChatGPT. Mattel sells a game called Pictionary vs. AI, in which players draw pictures and AI guesses what they are.

AI toys, which are equipped with microphones and connected to WiFi, are marketed as companions or educational products. They are pricier than traditional toys and can cost $100 or even double that.

Why are people worried about them?

From inappropriate content to privacy concerns, worries about AI toys grew this holiday season.

U.S. PIRG Education Fund researchers tested several toys. One that failed was Kumma, an AI-powered talking teddy bear that told researchers where to find dangerous objects such as knives and pills and conversed about sexually explicit content. The bear was running on OpenAI’s software.

Some toys also use tactics to keep kids engaged, which worries parents that the interactions could become addictive. There are also privacy concerns about data collected from children, and some worry about how these toys will affect kids’ developing brains.

“What does it mean for young kids to have AI companions? We just really don’t know how that will impact their development,” said Rory Erlich, one of the toy testers and authors of PIRG’s AI toys report.

Child advocacy group Fairplay has warned parents not to buy AI toys for children, calling them “unsafe.”

The group outlined several reasons, including that AI toys are powered by the same technology that’s already harmed children. Parents who have lost their children to suicide have sued companies such as OpenAI and Character.AI, alleging they didn’t put in enough guardrails to protect the mental health of young people.

Rachel Franz, director of Fairplay’s Young Children Thrive Offline program, said these toys are marketed online to millions of people as a way to educate and entertain kids.

“Young children don’t actually have the brain or social-emotional capacity to ward against the potential harms of these AI toys,” she said. “But the marketing is really powerful.”

How have toy makers and AI companies responded to these concerns?

Larry Wang, founder and chief executive of FoloToy, the Singapore startup behind Kumma, said in an email the company is aware of the issues researchers found with the toy.

“The behaviors referenced were identified and addressed through updates to our model selection and child-safety systems, along with additional testing and monitoring,” he said. “From the outset, our approach has been guided by the principle that AI systems should be designed with age-appropriate protections by default.”

The company welcomes scrutiny and ongoing dialogue about safety, transparency and appropriate design, he said, noting it’s “an opportunity for the entire industry to mature.”

OpenAI said it suspended FoloToy for violating its policies.

“Minors deserve strong protections and we have strict policies that developers are required to uphold. We take enforcement action against developers when we determine that they have violated our policies, which prohibit any use of our services to exploit, endanger, or sexualize anyone under 18 years old,” a company spokesperson said in a statement.

What AI toys have California startups created?

Curio, a Redwood City startup, sells stuffed animals, including a talking rocket plushie called Grok that’s voiced by artist Grimes, who has children with billionaire Elon Musk. Bondu, a San Francisco AI toy maker, made a talking stuffed dinosaur that can converse with kids, answering questions and role-playing.

Skyrocket, a Los Angeles-based toy maker, sells Poe, the AI story bear. The bear, powered by OpenAI’s large language model, comes with an app where users pick characters like a princess or a robot for a story. The bright-eyed bear, named after writer Edgar Allan Poe, generates stories based on that selection and recites them aloud.

But kids can’t have a back-and-forth conversation with the teddy bear as they can with other AI toys.

“It just comes with a lot of responsibility, because it greatly increases the sophistication and level of safeguards you have to have and how you have to control the content because the possibilities are so much greater,” said Nelo Lucich, co-founder and chief executive of Skyrocket.

Some companies, such as Olli in Huntington Beach, have created platforms used by AI toy makers, including the creators of the Imagix Crystal Ball. The toy projects an AI hologram companion that resembles a dragon or fairy.

Hai Ta, the founder and chief executive of Olli, said he views AI toys as different from screen time and talking to virtual assistants because each product is structured around a particular focus, such as storytelling.

“There’s an element of gameplay there,” he said. “It’s not just infinite, open-ended chatting.”

What is Mattel developing with OpenAI?

Mattel hasn’t revealed what products it is releasing with OpenAI, but a company spokesperson said they will be aimed at families and older customers, not children.

The company also said it views AI as a way to complement rather than replace traditional play and is emphasizing safety, privacy, creativity and responsible innovation when building new products.

This story originally appeared in the LA Times.