Apple proposes that Apple Vision Pro and later headsets could achieve high refresh rates by using a ‘foveated display’ that renders full detail only where the user is actively looking.

One of the key elements of a virtual reality or augmented reality headset like Vision Pro is a display with a high refresh rate. Screens that update quickly and respond faster to user motion reduce the risk of nausea caused by the image lagging behind head movements. They also help sell the illusion that a virtual environment or digital object really sits in the world.

One problem with advancing headset display technology is the expectation of ever-higher-resolution screens. Adding more pixels to a display means more elements must be updated on each refresh, and more data must be generated by the host device when rendering the scene.

In a newly granted patent titled “Foveated Display,” Apple aims to solve these problems by supplying the display with two separate streams of data: high-resolution imagery and low-resolution imagery.

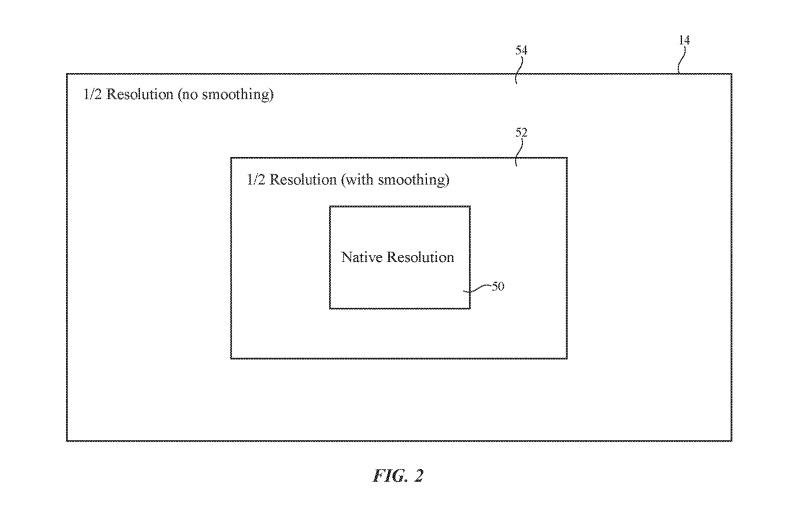

The core concept of a foveated display is that the screen does not need high-resolution imagery everywhere, only in the area the user is looking at. If that position can be determined, the display can show the resource-heavy high-resolution picture in the user’s direct line of sight and use lightweight low-resolution data for the rest of the screen.

Because the rest of the display falls within the user’s peripheral vision, which does not resolve fine detail, this approach can significantly cut the amount of work needed each time the display refreshes.
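To give a rough sense of the savings, the sketch below counts how many pixels a renderer would have to produce per frame. The panel size, the size of the full-resolution window, and the peripheral downscale factor are all hypothetical values chosen for illustration; the patent does not specify them, and real systems may divide the screen differently.

```python
def rendered_pixels(width, height, fovea_w, fovea_h, downscale):
    """Count pixels shaded per frame for a simple two-level foveated layout.

    Assumption (illustrative only): the foveal window is rendered at full
    resolution, and everything outside it is rendered at 1/downscale
    resolution along each axis, i.e. 1/downscale**2 as many pixels.
    """
    full_frame = width * height
    fovea = fovea_w * fovea_h
    periphery = full_frame - fovea
    return fovea + periphery // (downscale * downscale)


# Hypothetical 4000x4000 panel, 1000x1000 foveal window, periphery at 1/4 res per axis.
full = 4000 * 4000
foveated = rendered_pixels(4000, 4000, 1000, 1000, 4)
print(full, foveated, foveated / full)
```

Under these made-up numbers, the renderer shades 1,937,500 pixels instead of 16,000,000, roughly 12% of the full-resolution workload.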

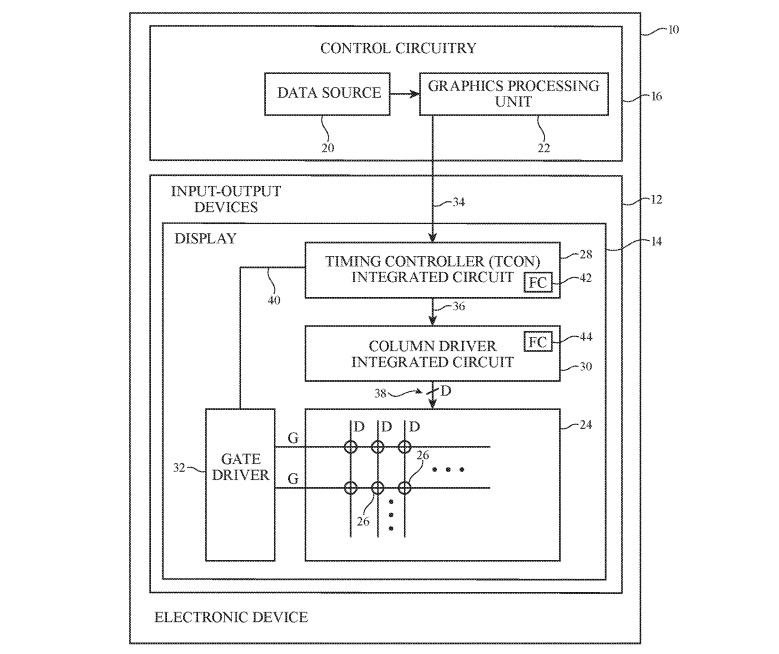

In Apple’s solution, a gaze-tracking system determines the point on the screen the user is looking at. Using this data, a graphics processing unit renders a high-resolution image for the part of the scene where the user is looking, along with a low-resolution version for the remainder of the picture.
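A minimal sketch of the first step, turning a gaze point into the rectangle that gets the high-resolution treatment, might look like the following. The square window, its size, and the `foveal_region` and `Rect` names are hypothetical; the patent does not describe how the region is shaped or sized. The window is clamped so it never extends past the panel edges, and the sketch assumes the window is no larger than the panel.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    x: int  # left edge, in display pixels
    y: int  # top edge, in display pixels
    w: int
    h: int


def foveal_region(gaze_x, gaze_y, display_w, display_h, size):
    """Return a size x size window centred on the gaze point, clamped to the panel.

    Hypothetical helper: assumes size <= display_w and size <= display_h.
    """
    half = size // 2
    x = min(max(gaze_x - half, 0), display_w - size)
    y = min(max(gaze_y - half, 0), display_h - size)
    return Rect(x, y, size, size)


print(foveal_region(2000, 2000, 4000, 4000, 1000))  # gaze at screen centre
print(foveal_region(100, 3950, 4000, 4000, 1000))   # gaze near bottom-left corner
```

When the user looks near a corner, the window slides inward rather than shrinking, so the amount of high-resolution data per frame stays constant.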

The display uses timing controller circuitry and column driver circuitry: the timing controller provides the image data to the column drivers, which then apply the changes to the display. This circuitry also switches between two buffers, one holding high-resolution data and one holding low-resolution data, each used for its respective region of the display.
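The buffer switching can be illustrated in software, although Apple describes it as display circuitry. In this simplified sketch, each output pixel is read from the high-resolution buffer when it falls inside the gaze window and from the low-resolution buffer otherwise. The low-resolution buffer is stretched with nearest-neighbour upsampling. The function name, the buffer layout (a low-resolution copy of the whole frame), and the integer `scale` factor are assumptions made for illustration, not details from the patent.

```python
def compose_frame(high, low, region, scale, width, height):
    """Assemble an output frame row by row, choosing a source buffer per pixel.

    high:   2-D list with the full-resolution foveal window (h rows x w cols)
    low:    2-D list covering the whole frame at 1/scale resolution (assumed layout)
    region: (x, y, w, h) of the foveal window in display coordinates
    """
    rx, ry, rw, rh = region
    frame = []
    for y in range(height):
        row = []
        for x in range(width):
            if rx <= x < rx + rw and ry <= y < ry + rh:
                # Inside the gaze window: read from the high-resolution buffer.
                row.append(high[y - ry][x - rx])
            else:
                # Outside it: nearest-neighbour lookup into the low-resolution buffer.
                row.append(low[y // scale][x // scale])
        frame.append(row)
    return frame


low_buf = [[1, 2], [3, 4]]  # 2x2 low-res image for a 4x4 display
high_buf = [[9]]            # 1x1 high-res window
for r in compose_frame(high_buf, low_buf, (1, 1, 1, 1), 2, 4, 4):
    print(r)
```

Only one full-resolution value is needed here; every other pixel is filled by repeating the coarser data, which is where the bandwidth savings come from.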

Interpolation and filter circuitry could alter the pixel data before it is applied. For example, it could smooth out visible seams where low-resolution data meets high-resolution data. Two-dimensional spatial filters could also be applied to the low-resolution data buffer.
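As an example of a two-dimensional spatial filter, the sketch below applies a 3x3 mean (box) filter to a low-resolution buffer, clamping coordinates at the edges. The patent does not specify a kernel; the box filter is simply one common choice, used here as an assumption to show how such filtering softens hard steps between coarse pixels.

```python
def box_filter_3x3(buf):
    """Apply a 3x3 mean filter to a 2-D list of numbers.

    Edge pixels reuse the nearest valid neighbour (clamp-to-edge), so the
    output has the same dimensions as the input.
    """
    h, w = len(buf), len(buf[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            total = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    total += buf[yy][xx]
            row.append(total / 9.0)
        out.append(row)
    return out


spike = [[0, 0, 0], [0, 9, 0], [0, 0, 0]]
print(box_filter_3x3(spike))
```

A single bright low-resolution pixel is spread evenly over its neighbourhood, which is the kind of softening that makes coarse peripheral data less conspicuous next to the sharp foveal region.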

An illustration of how different resolutions could be assembled on a display when the user is looking at the middle of the screen.

Although the patent has only now been granted, Apple filed the application on January 15, 2021, long before Vision Pro was announced. The application was unusual in that it talks specifically about headsets, so it wasn’t one of the potentially hundreds of Vision Pro patent applications hiding in plain sight.

Earlier filings related to this patent include a “Predictive, Foveated Virtual Reality System” that used a similar approach of different-resolution video feeds and selective rendering to minimize latency for the user. Apple has also explored a variety of eye-tracking systems, including some that use a “hot mirror” so the components can sit close to the user’s face rather than further away, making the headset more comfortable to wear for longer periods.

This story originally appeared on AppleInsider.