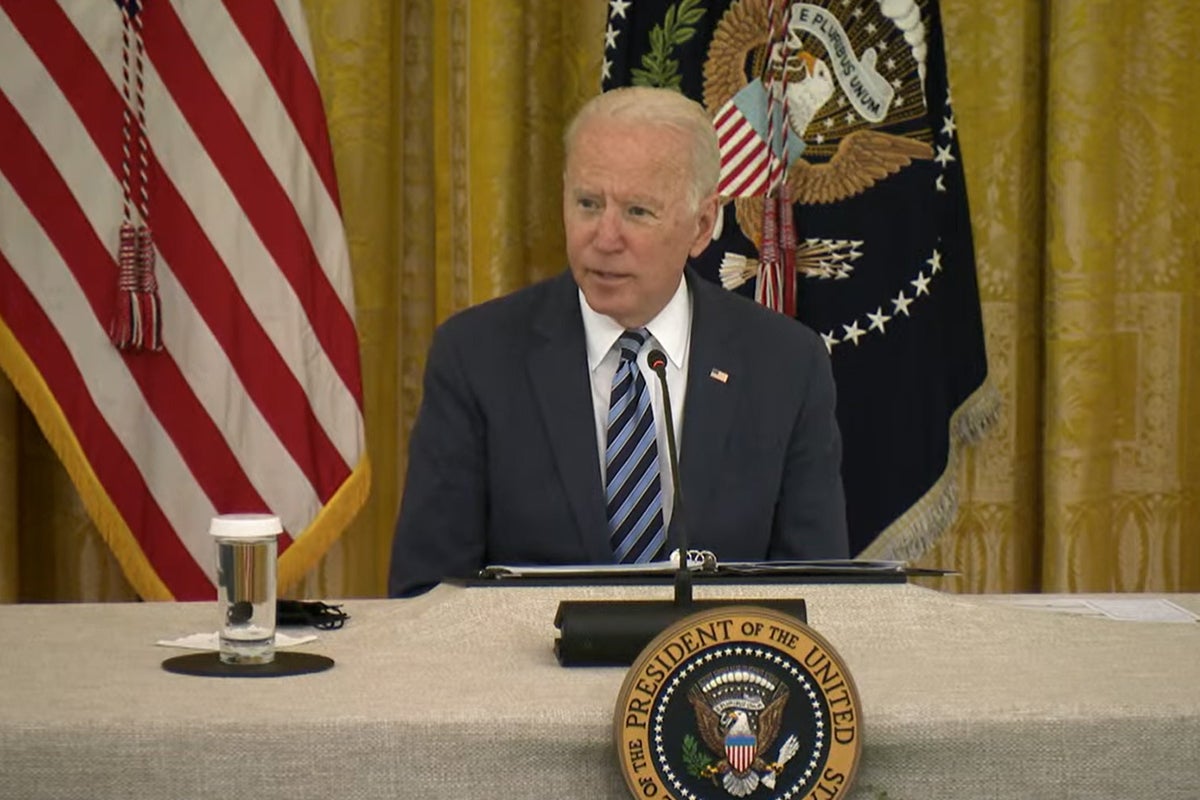

President Joseph R. Biden Jr. last month laid down a wide-ranging executive order targeting generative AI, dealing with everything from safety and security measures, to issues related to bias and civil rights, to oversight of how genAI is produced. On the surface, the order sounds like a comprehensive and powerful one.

But is it really? Microsoft, along with most other big genAI creators, welcomed the order, with Microsoft Vice Chair and President Brad Smith calling it “another critical step forward in the governance of AI technology…. We look forward to working with US officials to fully realize the power and promise of this emerging technology.”

He wasn’t alone. Other tech execs hailed it as well. Why? The New York Times put it this way: “Executives at companies like Microsoft, Google, OpenAI and Meta have all said that they fully expect the United States to regulate the technology — and some executives, surprisingly, have seemed a bit relieved. Companies say they are worried about corporate liability if the more powerful systems they use are abused. And they are hoping that putting a government imprimatur on some of their AI-based products may alleviate concerns among consumers.”

That brings up a basic question: Does Smith’s and other tech leaders’ support for government regulation mean we can feel secure AI will be deployed in a responsible way? Or are they pleased with Biden’s action because they’ll be left alone to do what they please?

To answer that, we first need to look into the details of the order.

Biden faces off against unregulated AI

Biden was blunt about why he issued the order: “To realize the promise of AI and avoid the risks, we need to govern this technology. There’s no other way around it.”

Presidents frequently use executive orders as a way to make it appear they’re taking serious action, while doing little more than scoring political points. This time, it’s different. The genAI regulations are based on a carefully researched analysis of the many ways in which the technology could go off the rails and cause serious harm if allowed to be developed unfettered. They’re designed to erect guardrails around it.

The standards focus on multiple areas, the most important of which are safety and security, privacy, and equity and civil rights. Among the safety and security strictures is a requirement that companies developing the biggest AI systems (think Microsoft, Google, Meta and OpenAI) safety-test their systems and share the results with the government. That way, the order claims, the government can make sure the systems are safe and secure before they’re released.

Additionally, several government agencies, including the National Institute of Standards and Technology and the US Department of Homeland Security, will establish “red-team” testing standards overseeing “critical infrastructure, as well as chemical, biological, radiological, nuclear, and cybersecurity risks,” in the words of the order. Also important: standards for watermarking to label genAI content so people can know when something has been created by AI – a way to help stop the viral spread of AI-based misinformation.

As for privacy, the order calls on the federal government to support techniques to help ensure genAI systems can be trained while protecting the privacy of whatever is in the training data. It would also evaluate the way in which federal agencies collect and use commercial information, such as data purchased from data brokers, to make sure that personally identifiable data is expunged.

Particularly important are the efforts to advance equity and preserve civil rights. The order looks to prevent landlords from using AI to discriminate against renters. It also calls for the development of “best practices on the use of AI in sentencing, parole and probation, pretrial release and detention, risk assessments, surveillance, crime forecasting and predictive policing, and forensic analysis.”

It also aims to protect the labor force and calls for the development of best practices to “prevent employers from undercompensating workers, evaluating job applications unfairly, or impinging on workers’ ability to organize.”

There’s a lot more, including grants for AI research in healthcare and climate change. It also makes it easier for companies to attract and hire AI talent from overseas.

That all sounds impressive — and it is. Tim Wu, a Columbia law professor and author, has frequently been a harsh critic of the ways in which he believes the government allows the tech industry to cause serious harm by the spread of misinformation on social media. He thinks it should also be far more serious about regulation, particularly when it comes to antitrust violations. As for Biden’s AI action, he wrote in a New York Times opinion piece: “Mr. Biden’s executive order outdoes even the Europeans by considering just about every potential [AI] risk one could imagine, from everyday fraud to the development of weapons of mass destruction.”

Microsoft and other tech firms sign on…for good reasons

So, does the support of Microsoft and other big tech firms mean Biden’s order is just a PR move and not the real thing? No, it doesn’t. It’s a rare case where tech regulations are not just good for the country, but good for tech companies like Microsoft, too.

It means people and businesses might be more willing to accept and use AI because they feel it’s safe and secure. For tech companies, that means more customers. And that means more profits.

The regulations are also good for tech firms because they’ll cut through red tape and make it easier for the firms to attract AI talent from around the world.

Of course, keep in mind that the executive order by itself doesn’t have nearly as much bite as you might think. In many cases, it covers only AI use by the federal government. Private companies could still try to evade many of the guidelines and regulations.

For it to have the greatest effect, Congress will have to act — not a foregone conclusion. You can be sure that if elected officials consider follow-up legislation to give the order more teeth, Microsoft and other bigwigs with AI ambitions will have their lobbyists out in force. As I’ve written before, Microsoft President Brad Smith and Sam Altman, CEO of OpenAI (in which Microsoft has invested $13 billion), are Congress’s favorite tech execs for advice on how to regulate generative AI.

So, it’s no wonder Microsoft is happy with Biden’s order. It will help assuage people’s fears about AI and allow the company to hire AI talent from overseas. And if Congress ever gets around to adding serious regulations, the company will have the biggest place at the table – and it can make sure it gets the regulations it wants.

Copyright © 2023 IDG Communications, Inc.

This story originally appeared on Computerworld