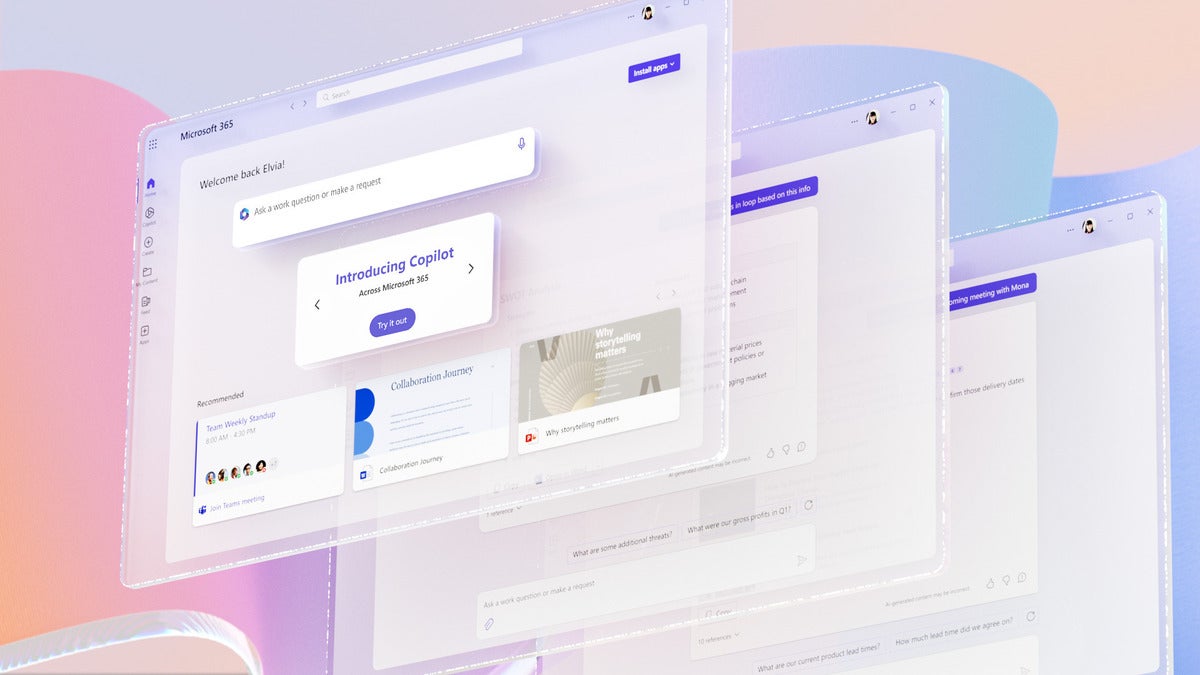

Since announcing a partnership with ChatGPT creator OpenAI earlier this year, Microsoft has been deploying its Copilot generative AI assistant across its suite of Microsoft 365 business productivity and collaboration apps. Word, Outlook, Teams, Excel, PowerPoint, and a range of other applications will be connected to the AI assistant, which can automate tasks and create content — potentially saving users time and bolstering productivity.

With the M365 Copilot, Microsoft aims to create a “more usable, functional assistant” for work, said J.P. Gownder, vice president and principal analyst at Forrester’s Future of Work team. “The concept is that you’re the ‘pilot,’ but the Copilot is there to take on tasks that can make life a lot easier.”

M365 Copilot is “part of a larger movement of generative AI that will clearly change the way that we do computing,” he said, noting how the technology has already been applied to a variety of job functions — from writing content to creating code — since ChatGPT-3 launched in late 2022. Whether or not Copilot will be the catalyst for a shift in how collaboration and productivity apps work remains to be seen, he said.

So far, there have only been demos of the Microsoft tool; it’s in early testing with a limited number of customers and is expected to be generally available later this year.

Though generative AI tools have proliferated in recent months, serious questions remain about how enterprises can use the technology without risking their data.

M365 Copilot is “not fully enterprise-ready, especially in regulated industries,” said Avivah Litan, distinguished vice president analyst at Gartner. She pointed to various data privacy and security risks related to the use of large language models (LLMs), which underpin generative AI tools, as well as their tendency to “hallucinate” or provide incorrect information to users.

What is Microsoft 365 Copilot?

The M365 Copilot “system” consists of three elements: Microsoft 365 apps such as Word, Excel and Teams, where users interact with the AI assistant; Microsoft Graph, which includes files, documents, and data across the Microsoft 365 environment; and the OpenAI models that processes user prompts: OpenAI’s ChatGPT-3, ChatGPT-4, DALL-E, Codex, and Embeddings.

These models are all hosted on Microsoft’s Azure cloud environment.

Copilot is just part of Microsoft’s overall generative AI push. There are plans for Copilots tailored to Microsoft’s Dynamics 365 business apps, PowerPlatform, the company’s security suite, and its Windows operating system. Microsoft subsidiary GitHub also developed a GitHub Copilot with OpenAI a couple of years ago, essentially providing an auto-complete tool for coders.

The key component of Copilot, as with other generative AI tools, is the LLM. These language models are best thought of as a machine-learning network trained through data input/output sets; the model uses a self-supervised or semi-supervised learning methodology. Basically, data is ingested and the LLM spits out a response based on what the algorithm predicts the next word will be. The information in an LLM can be restricted to proprietary corporate data or, as is the case with ChatGPT, can include whatever data it’s fed or scraped directly from the web.

The aim of Copilot is to improve worker productivity by automating tasks, whether that be drafting an email or creating a slideshow. In a blog post announcing the tool, Satya Nadella, Microsoft chairman and CEO, described it as “the next major step in the evolution of how we interact with computing…. With our new copilot for work, we’re giving people more agency and making technology more accessible through the most universal interface — natural language.”

There much optimism about the time-saving potential of AI in the workplace. A Stanford University and Massachusetts Institute of Technology study earlier this year noted a 14% productivity gain for call center workers accessing an (unnamed) generative AI tool; meanwhile, Goldman Sachs Research estimates that a generative AI-led productivity boom could add $7 trillion to the world economy over 10 years.

However, businesses should temper any high hopes for immediate benefits, said Raúl Castañón, senior research analyst at 451 Research, a part of S&P Global Market Intelligence. “There is significant potential for productivity improvement. However, I expect this will come in waves,” he said. “In the near term, we will probably see small improvements in employees’ day-to-day work with the automation of repetitive tasks.”

Though Copilot could save worker time by consolidating information from different sources or generating drafts, any productivity gains will come in “marginal increments.”

“Furthermore,” Castañón said, “these examples are activities that do not add value, i.e., they are overhead tasks that for the most part, do not directly impact the activities where value is created. This will come in time.”

Copilot pricing and availability

Microsoft’s Copilot is available to a small limited of Microsoft 365 customers now as part of its early access trial. Chevron, Goodyear, and General Motors are among those now testing the AI assistant.

While Microsoft has no set date for release, Copilot is expected to be widely available late this year. The Microsoft 365 roadmap states that Copilot in SharePoint will roll out to users beginning in November, but Microsoft declined to say whether this would mark the general availability date across the rest of the suite.

Pricing also remains unknown. The launch of a Premium tier for Teams, which is required to access AI features such as an “intelligent” meeting recap, speaker timeline markers, and AI note-taking, could indicate that Copilot will be available for higher-tier M365 customers. Microsoft declined to comment on availability.

Settling on a strategy is key to success. “The best of products can be strangled in the crib by bad licensing and poor accessibility,” said Gownder.

If, for example, Microsoft were to include Copilot as part of its E5 enterprise offering, many smaller businesses might not get access to the technology, slowing its overall growth.

“This is a big risk for Microsoft, in my view, because on the one hand they want everyone to use Copilot — if it’s not widely available, then it doesn’t become the de facto standard that everyone uses,” Gownder said. “But they also want to monetize it.”

How do you use Copilot?

There are two basic ways users will interact with Copilot. It can be accessed directly within a particular app — to create PowerPoint slides, for example, or an email draft — or via a natural language chatbot accessible in Teams, known as Business Chat.

Microsoft

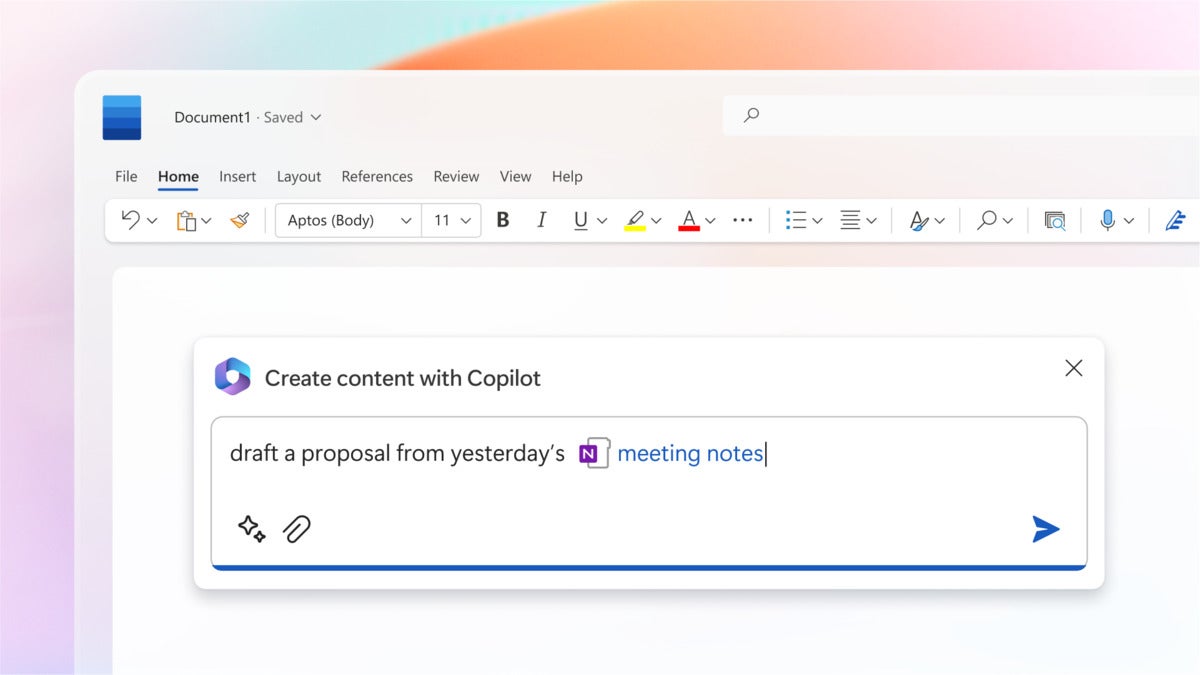

MicrosoftCopilotcan help a Word user draft a proposal from meeting notes.

Interactions within apps can take a variety of forms. When Copilot is invoked in a Word document, for example, it can suggest improvements to existing text, or even create a first draft.

To generate a draft, a user can ask Copilot in natural language to create text based on a particular source of information or from a combination of sources. One example: creating a draft proposal based on meeting notes from OneNote and a product roadmap from another Word doc. Once a draft is created, the user can edit it, adjust the style, or ask the AI tool to redo the whole document. A Copilot sidebar provides space for more interactions with the bot, which also suggests prompts to improve the draft, such as adding images or an FAQ section.

During a Teams video call, a participant can request a recap of what’s been discussed so far, with Copilot providing a brief overview of conversation points in real-time via the Copilot sidebar. It’s also possible to ask the AI assistant for feedback on people’s views during the call, or what questions remain unresolved. Those unable to attend a particular meeting can send the AI assistant in their place to provide a summary of what they missed and action items they need to follow up on.

In PowerPoint, Copilot can automatically turn a Word document into draft slides that can then be adapted via natural language in the Copilot sidebar. Copilot can also generate suggested speaker notes to go with the slides and add more images.

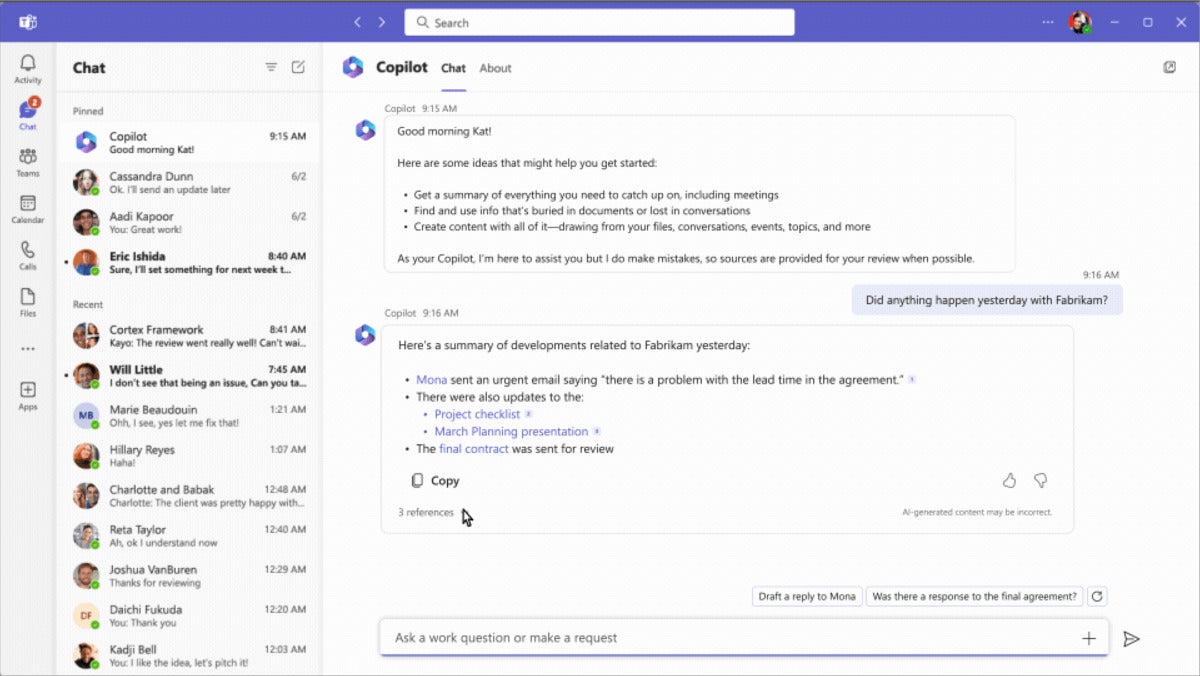

The other way to interact with Copilot is via Business Chat, which is accessible as a chatbot with Teams. Here, Business Chat works as a search tool that surfaces information from a range of sources, including documents, calendars, emails and chats. For instance, an employee could ask for an update on a project, and get a summary of relevant team communications and documents already created, with links to sources.

Microsoft

MicrosoftCopilot can aynthesize information from different sources about a project.

How does Copilot compare with other generative AI tools for productivity and collaboration?

Most vendors in the productivity and collaboration software market are adding generative AI to their offerings, though these are still in early stages. Google, Microsoft’s main competitor in the productivity software arena, has announced plans to incorporate generative AI into Workspace suite. Duet AI for Workspace, announced last month and currently in a private preview, can provide Gmail conversation summaries, draft text and generate images in Docs and Slides, for instance.

Slack, the collaboration software firm owned by Salesforce and a rival to Microsoft Teams, is also working to introduce LLMs in its software. Other firms that compete with elements of the Microsoft 365 portfolio, such as Zoom, Box, and Cisco, have also touted generative AI plans.

“On the vendor side, many are jumping on the generative AI bandwagon as evidenced from the plethora of announcements in the first half of the year,” said Castañón. “Despite the overhype [about generative AI], this indicates the technology is being rapidly incorporated into vendors’ product roadmaps.”

Although it’s difficult to compare products at this stage, Copilot appears to have some advantages over rivals. One is Microsoft’s dominant position in the productivity and collaboration software market. While competitors such as Cisco Webex and Grammarly may be comparable to Copilot in terms of accuracy, said Castañón, Microsoft’s ability to apply its AI assistant to a suite that already has a large customer base will drive adoption.

“The key advantage the Microsoft 365 Copilot will have is that — like other previous initiatives such as Teams — it has a ‘ready-made’ opportunity with Microsoft’s collaboration and productivity portfolio and its extensive global footprint,” he said.

Microsoft’s close partnership with OpenAI (Microsoft has invested billions of dollars in the company on several occasions since 2019 and has a large non-controlling share of the business), likely helped it build generative AI across its applications at faster rate than rivals.

“Its investment in OpenAI has already had an impact, allowing it to accelerate the use of generative AI/LLMs in its products, jumping ahead of Google Cloud and other competitors,” said Castañón.

What are the generative AI risks for businesses?

Along with the potential benefits of generative AI, businesses should consider risks. There are concerns around the use of LLMs in the workplace generally, and specifically with Copilot.

“At [this point], Microsoft 365 Copilot is not, in Gartner’s view, fully ‘enterprise-ready’ — at least not for enterprises operating in regulated industries or subject to privacy regulations such as the EU’s GDPR or forthcoming Artificial Intelligence Act,” Gartner analysts wrote in a recent report (subscription required).

While Copilot inherits existing Microsoft 365 access controls and enterprise policies, these are not always sufficient to address the risks posed by the use of LLMs, Gartner said. Several risks can arise when deploying Copilot in its current form, said Litan, one of the authors of the Gartner report.

She argued that additional controls are likely needed before Copilot launches.

Content filters to avoid hallucinations

One concern for businesses is the ability to filter information entered in an LLM by users and the results AI tools generate. Content filters are required, for instance, to prevent unwanted information being passed on to users, including “hallucinations” where LLMs respond with incorrect information.

“Hallucinations can result in bad information going out to other partners, your employees, your customers, and in the worst case it can result in malicious activity being spread around your whole ecosystem,” said Litan. “So you have to filter these outputs for policy violations, and hallucinations and malicious activity. There’s a lot that can go wrong.”

While Microsoft’s Azure OpenAI Service offer content-filtering options for specific topics (“hate,” “sexual,” “violence,” and “self-harm”), they’re not enough, said Litan, to eliminate hallucination errors, appropriation of copyrighted information, or biased results. Customers need to create filters customized to their own environment and business needs, she said.

But “there’s no ability [in Copilot] to put in your own enterprise policies to look at the content and say, ‘This violates acceptable use.’ There’s also no ability to filter out hallucinations, to filter out copyright. Enterprises need those policies.”

While third-party content filtering tools have begun to emerge, they’re not production-ready yet, said Litan. Some examples are AIShield GuArdian and Calypso AI Moderator.

A Microsoft spokesperson said the company is working to mitigate challenges around Copilot results: “We have large teams working to address issues such as misinformation and disinformation, content filtering, and preventing the promotion of harmful or discriminatory content in line with our AI principles,” the spokesperson said.

“For example, we have and will continue to partner with OpenAI on their alignment work, and we have developed a safety system that is designed to mitigate failures and avoid misuse with things like content filtering, operational monitoring and abuse detection, and other safeguards.”

The Copilot paid preview will be used to surface and address problems that arise before a wider launch. “We are committed to improving the quality of this experience over time and to make it a helpful and inclusive tool for everyone,” the spokesperson said.

Data protection is a must

Data protection is another issue because of the potential for sensitive data to leak out to an LLM. That happened when Samsung employees accidentally leaked sensitive data while accessing ChatGPT, prompting a company ban on OpenAI’s chatbot, Google’s Bard and Microsoft’s Bing.

Microsoft said Copilot can allow employees to use generative AI without compromising confidential information. According to the company, user prompt history is deleted after accessing Copilot, and no customer data is used to train or improve the language model.

However, businesses will need “legally binding data protection assurances” around this, Gartner suggested in its report.

Microsoft’s Azure shared responsibility model means customers take responsibility for securing their data, but that’s problematic when data is sent to the LLMs. “Users have complete responsibility for their data, but they have no control over what’s inside the LLM environment,” said Litan.

There are also compliance considerations for those in regulated sectors due to the Copilot LLM’s complex nature. Because so little is known publicly about LLMs, it’s difficult for companies to guarantee data is safe.

Prompt injection attacks are possible

The use of LLMs also opens up the prospect of prompt injections, where an attacker hijacks and controls a language model’s output — and gains access to sensitive data. While Microsoft 365 has enterprise security controls in place, “they’re not directed at the new AI functions and content,” said Litan. “The legacy security controls are definitely needed, but you also need things that look at the AI inputs and outputs. The model is a separate vector. It’s a different attack vector and compromise vector.

“Security is hardly ever baked into products during development until there is a breach,” she noted.

Microsoft said its team is working to address these issues. “As part of this effort, we are identifying and filtering these types of prompts at multiple levels before they get to the model and are continuously improving our systems in this regard,” the company spokesperson said.

“As the Copilot system builds on our existing commitments to data security and privacy in the enterprise, prompt injection cannot be used to access information a user would otherwise not have access to. Copilot automatically inherits your organization’s security, compliance, and privacy policies for Microsoft 365.”

How will Copilot evolve?

Ahead of a full launch, the company’s plan is to deploy its AI assistant across as many Microsoft apps as it can. This means generative AI features will also be available in a variety of tools, including OneNote, OneDrive, SharePoint, and Viva, among others.

Copilot will also be available natively in the Edge browser, and can use website content as context for user requests. “For example, as you’re looking at a file your colleague shared, you can simply ask, ‘What are the key takeaways from this document?’” said Lindsay Kubasik, group product manager for Edge Enterprise, in a blog post announcing the feature.

Microsoft also plans to extend Copilot’s reach into other apps workers use via “plugins — essentially third-party app integrations. These will allow the assistant to tap into data held in apps from other software vendors including Atlassian, ServiceNow, and Mural. Fifty such plugins are available for early access customers, with “thousands” more expected eventually, Microsoft said.

To help businesses deploy the AI assistant across their data, Microsoft created the Semantic Index for Copilot, a “sophisticated map of your personal and your company data,” and a “pre-requisite” to adopting Copilot within an organization. Using the index should provide more accurate searches of corporate data, Microsoft said. For example, when a user asks for a “March Sales Report,” the Semantic Index won’t just look for documents that include those specific terms; it will also consider additional context such as which employee usually produces sales reports and which application they likely use.

How can M365 customers prepare for Copilot?

Given the various challenges, businesses interested in deploying Copilot can start preparing now. (Microsoft recently touted a series of commitments to support customers in deploying AI tools within their organizations.)

“First of all, they should do proof of concepts and look at filtering products to try to minimize the risks from errors and hallucinations and unwanted outputs. They should experiment with these and use them,” Litan said.

Gownder suggested businesses consider providing guidance to employees about the use of generative AI tools. This is needed whether or not they deploy Copilot, as all businesses and IT departments will have to contend with employee use of generative AI tools.

“IT [might] say, ‘We’re banning ChatGPT from the corporate network,’ but everyone has a phone, so if you’re busy, you’re going to use that,” said Gownder. “So, there is some impetus to figure this out.”

One way to prepare employees is to educate them about the pitfalls of the technology. This is true for Copilot, the consumer version of ChatGPT, or any other chatbot an employee might use.

“If you ask ChatGPT to give you an answer to something, that’s a starting point, not an end point,” said Gownder. “Do not publish and use something straight out of ChatGPT. Any factual claim that the ChatGPT output makes, you need to double check it against sources, because it could be made up.

“This is all changing so quickly, so standing up programs to help people understand the basics of generative AI, and [also] the limits, will be good preparation no matter what happens with Copilot,” he said. “The Pandora’s Box is open: generative AI will be coming to you soon, even as ‘BYO’ [‘bring your own AI’] if you choose not to deploy it. So you better have a strategy in short order about this.”

Copyright © 2023 IDG Communications, Inc.

This story originally appeared on Computerworld